Cost-Performance Analysis for Large-Scale Model Training on Matrice

Nov 5, 2024

Discover how Matrice optimizes AI model training costs and performance across leading cloud platforms.

Introduction

Building powerful AI solutions is a top priority for businesses, but the cost of training models on large datasets can be a significant challenge. More capable models often demand larger datasets, and the compute required to train on them can strain budgets.

Enter Matrice, a no-code platform for building computer vision models. Matrice lets users build advanced AI solutions without deep coding expertise or extensive infrastructure. To test these claims, we conducted a comprehensive study of the cost and performance of training an object detection model (YOLOv8) on a dataset of 50,000 images. This post shares the insights from our ablation study and shows how Matrice helps businesses train models efficiently and cost-effectively.

Why Conduct This Ablation Study?

Training a model on 50,000 images isn’t just a test; it reflects real-world challenges faced by businesses daily. We sought to answer two critical questions:

How cost-efficient is training large datasets on Matrice?

How do different cloud providers and GPU configurations affect cost and training time?

Our findings empower businesses to make informed decisions by balancing speed, cost, and performance. Whether you’re racing against deadlines or optimizing a tight budget, Matrice’s platform adapts to your needs.

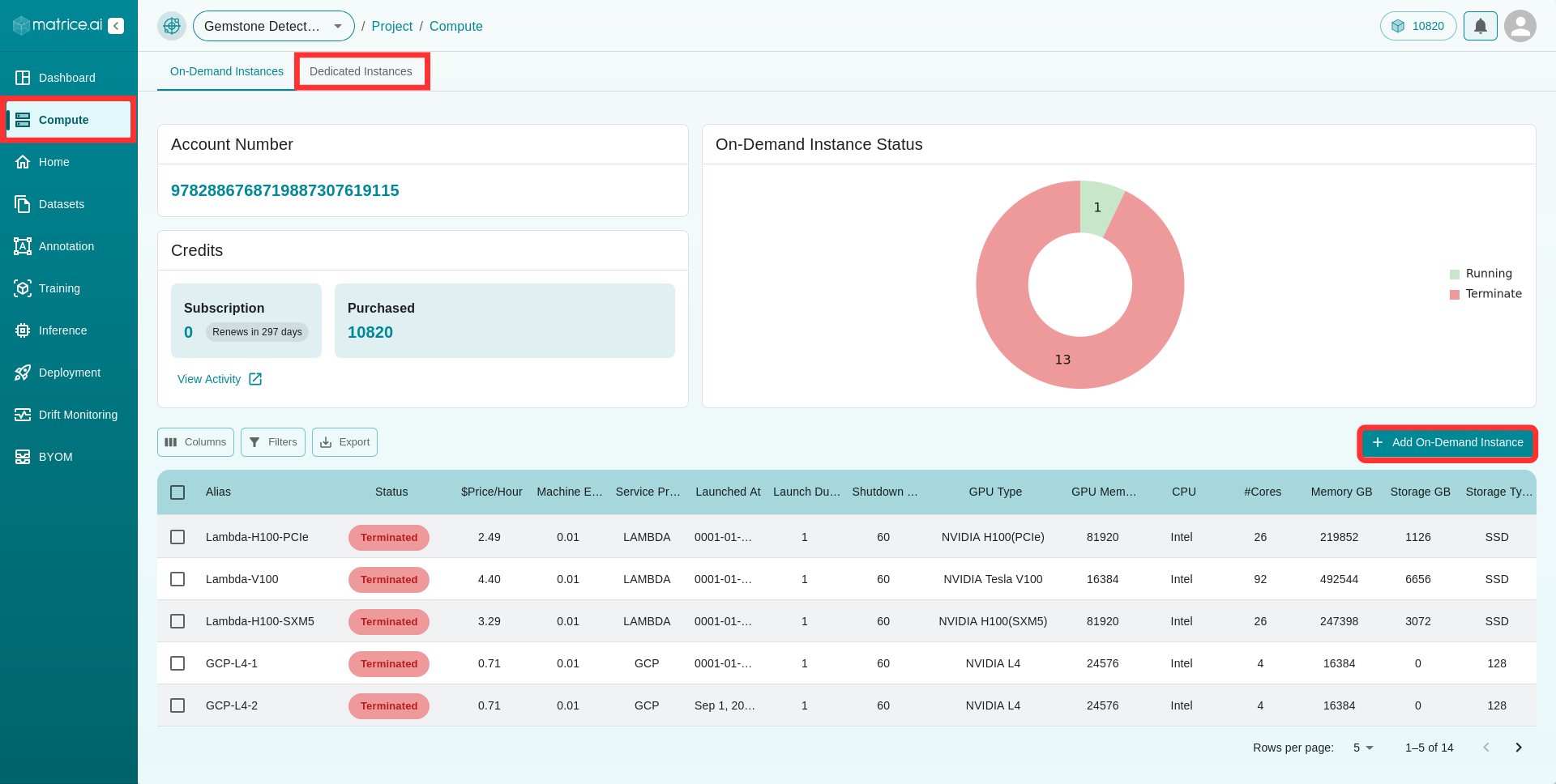

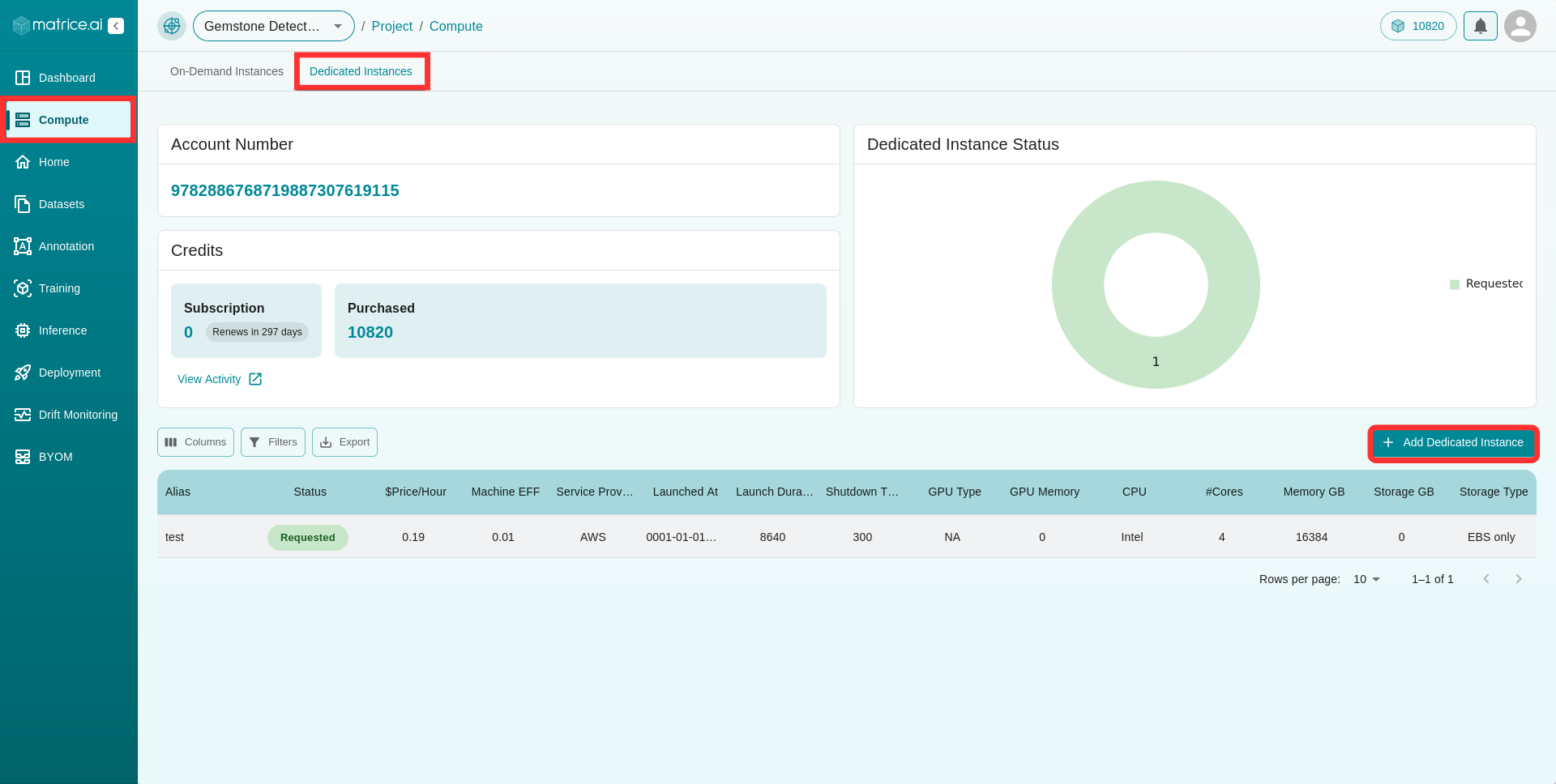

Matrice’s Compute Platform: Simplified Instance Selection

Matrice simplifies the process of selecting compute resources through an intuitive interface that offers two types of instances:

On-Demand Instances: Ideal for flexible timelines and smaller budgets, these instances are available on a first-come, first-served basis.

Dedicated Instances: Exclusively allocated for enterprise users, ensuring consistent access to resources for mission-critical projects.

Launching an instance is as easy as clicking “Add On-Demand Instance” or “Add Dedicated Instance” and specifying basic details. Matrice’s advanced search options help you select the optimal instance based on performance, cost, and availability, making cloud infrastructure accessible to everyone.

Study Results and Observations

Every run trained YOLOv8-s on the same 50,000-image dataset for 90 epochs; only the cloud provider, GPU, and batch size changed. The results across leading cloud providers:

| Service Provider | GPU Machine | VRAM | Dataset Size (images) | Batch Size | Epochs | Model Family | Model Name | Training Time | Accuracy | Cost (credits) |
|---|---|---|---|---|---|---|---|---|---|---|
| AWS | V100 | 16GB | 50,000 | 32 | 90 | YOLOv8 | YOLOv8-s | 10 hrs | 0.97 | 44 |
| OCI | A10 | 24GB | 50,000 | 32 | 90 | YOLOv8 | YOLOv8-s | 8 hrs | 0.97 | 26 |
| GCP | L4 | 24GB | 50,000 | 32 | 90 | YOLOv8 | YOLOv8-s | 17 hrs | 0.97 | 16 |
| Lambda | H100 (SXM5) | 80GB | 50,000 | 32 | 90 | YOLOv8 | YOLOv8-s | 3 hrs | 0.98 | 13 |
| Lambda | H100 (SXM5) | 80GB | 50,000 | 128 | 90 | YOLOv8 | YOLOv8-s | 2 hrs 30 min | 0.98 | 11 |

Key Takeaways

Lambda’s H100: The Clear Winner

Fastest training time: 2.5 hours (batch size 128)

Highest accuracy: 0.98

Most cost-effective: 11 credits

This configuration is ideal for teams balancing tight deadlines and budgets.
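These takeaways can be checked directly against the table. The sketch below re-enters the five runs as plain Python data and derives an effective credits-per-hour rate (total credits divided by training hours). That rate is our own derived figure, not a price published by Matrice or the cloud providers. It suggests the H100 runs consume credits at roughly the same hourly rate as the AWS V100 (about 4.3 to 4.4 credits per hour) and come out cheapest because they finish several times faster, while GCP's L4 has by far the lowest hourly rate (about 0.94) but the longest run.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Run:
    provider: str
    gpu: str
    batch_size: int
    hours: float
    accuracy: float
    credits: int


# Results copied from the study's table (YOLOv8-s, 50,000 images, 90 epochs).
RUNS = [
    Run("AWS", "V100", 32, 10.0, 0.97, 44),
    Run("OCI", "A10", 32, 8.0, 0.97, 26),
    Run("GCP", "L4", 32, 17.0, 0.97, 16),
    Run("Lambda", "H100 (SXM5)", 32, 3.0, 0.98, 13),
    Run("Lambda", "H100 (SXM5)", 128, 2.5, 0.98, 11),
]


def credits_per_hour(run: Run) -> float:
    """Derived burn rate: total credits divided by wall-clock training hours."""
    return run.credits / run.hours


def summarize(runs: list[Run]) -> None:
    # One line per run: time, total cost, and the derived hourly rate.
    for run in runs:
        print(f"{run.provider:<7} {run.gpu:<12} bs={run.batch_size:<4} "
              f"{run.hours:>5.1f} h  {run.credits:>3} credits  "
              f"{credits_per_hour(run):.2f} credits/h")


if __name__ == "__main__":
    summarize(RUNS)
    fastest = min(RUNS, key=lambda r: r.hours)
    cheapest = min(RUNS, key=lambda r: r.credits)
    print("fastest: ", fastest.provider, fastest.gpu, "bs", fastest.batch_size)
    print("cheapest:", cheapest.provider, cheapest.gpu, "bs", cheapest.batch_size)
```

The same batch-128 H100 run minimizes both time and credits, which is why it is singled out above.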

Batch Size Matters

By increasing the batch size from 32 to 128 on Lambda’s H100:

Costs fell by about 15%, from 13 to 11 credits.

Training time fell by 30 minutes (about 17%), from 3 hours to 2.5 hours.
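Both percentages follow from the two Lambda H100 rows of the table. One plausible reason the larger batch helps is that it cuts the number of optimizer steps per epoch by roughly 4x, letting a large-memory GPU such as the H100 process more images per step. The step counts below are back-of-the-envelope figures that assume the final partial batch of each epoch is kept; they are not measurements from the study.

```python
import math

DATASET_IMAGES = 50_000
EPOCHS = 90


def optimizer_steps(batch_size: int, images: int = DATASET_IMAGES,
                    epochs: int = EPOCHS) -> int:
    """Total optimizer steps, assuming the last partial batch of each epoch is kept."""
    return math.ceil(images / batch_size) * epochs


def pct_reduction(before: float, after: float) -> float:
    """Percentage reduction going from `before` to `after`."""
    return 100.0 * (before - after) / before


# Lambda H100 (SXM5) runs from the table: batch size 32 vs 128.
cost_cut = pct_reduction(13, 11)    # credits
time_cut = pct_reduction(3.0, 2.5)  # hours

print(f"steps at bs=32:  {optimizer_steps(32):,}")   # 140,670
print(f"steps at bs=128: {optimizer_steps(128):,}")  # 35,190
print(f"cost reduction:  {cost_cut:.1f}%")           # 15.4%
print(f"time reduction:  {time_cut:.1f}%")           # 16.7%
```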

Balancing Cost, Performance, and Reliability

Lambda Labs: Most economical and fastest, though on-demand availability can be limited. Dedicated instances resolve this issue for consistent access.

AWS and OCI: Offer high availability and reliability, making them suitable for continuous or real-time GPU access.

GCP: A budget-friendly option for projects with flexible timelines; its L4 run cost 16 credits but took the longest, at 17 hours.

Making the Right Choice

Here’s how to choose the best configuration:

Need cost-efficient and fast training? Use Lambda’s H100.

Working to a flexible timeline and want lower costs than AWS or OCI? GCP is a good choice, provided you can accept the longest training time.

Prioritize reliability and resource availability? Opt for AWS or OCI.
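The guidance above amounts to picking the cheapest configuration that meets a deadline, restricted to the providers you can actually get capacity from. The sketch below encodes that rule over the study's five runs. `pick_config`, `Result`, and the `providers` parameter are hypothetical names for illustration, not part of Matrice's platform or any cloud provider's API, and the numbers only describe this workload (YOLOv8-s, 50,000 images, 90 epochs).

```python
from typing import Iterable, NamedTuple, Optional


class Result(NamedTuple):
    provider: str
    gpu: str
    batch_size: int
    hours: float
    credits: int


# Runs copied from the study's table.
RESULTS = [
    Result("AWS", "V100", 32, 10.0, 44),
    Result("OCI", "A10", 32, 8.0, 26),
    Result("GCP", "L4", 32, 17.0, 16),
    Result("Lambda", "H100 (SXM5)", 32, 3.0, 13),
    Result("Lambda", "H100 (SXM5)", 128, 2.5, 11),
]


def pick_config(max_hours: float,
                providers: Optional[Iterable[str]] = None) -> Optional[Result]:
    """Hypothetical helper: cheapest run finishing within max_hours.

    If `providers` is given, only those providers are considered
    (e.g. to exclude one with no on-demand capacity right now).
    Returns None when no run meets the constraints.
    """
    allowed = set(providers) if providers is not None else None
    candidates = [
        r for r in RESULTS
        if r.hours <= max_hours and (allowed is None or r.provider in allowed)
    ]
    # Cheapest first; break ties on training time.
    return min(candidates, key=lambda r: (r.credits, r.hours), default=None)


print(pick_config(24))                           # Lambda H100, batch 128
print(pick_config(24, {"AWS", "OCI", "GCP"}))    # GCP L4
print(pick_config(12, {"AWS", "OCI", "GCP"}))    # OCI A10
print(pick_config(2))                            # None
```

For example, if Lambda on-demand capacity is unavailable and the deadline is a day, the rule falls back to GCP's L4 (16 credits); tighten the deadline to 12 hours and OCI's A10 (26 credits) becomes the cheapest option.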

Matrice’s platform integrates seamlessly with all providers, allowing users to adapt configurations as project needs evolve.

Conclusion

Matrice’s no-code platform empowers businesses to tackle large-scale AI projects with optimized costs and performance. With flexible compute options and actionable insights from our ablation study, Matrice helps you train on large datasets without exceeding your budget.

Explore Matrice today and transform your computer vision initiatives with a platform designed for cost-efficiency and adaptability.
