Cell Segmentation in Microscopy Images: Accelerating Biomedical Insights with YOLOv9-Seg

May 23, 2025

“From painstaking manual annotation to real-time, pixel-perfect masks—AI is reshaping the microscope.”

Microscopy is a cornerstone of biological research and clinical diagnostics. The ability to accurately identify and delineate individual cells within complex images is crucial for a myriad of applications. However, traditional manual segmentation is a significant bottleneck – slow, labor-intensive, and prone to inter-observer variability. The advent of AI, particularly deep learning-based instance segmentation, is revolutionizing this field by offering automated, rapid, and reproducible cell analysis.

This blog explores the critical importance of cell segmentation, the implementation of cutting-edge models like YOLOv9-Seg (specifically the yolov9c_seg variant) using the “Cell segmentation dataset (v1.0)”, and the profound impact AI is having on biomedical discovery and laboratory efficiency.

1. Why Accurate Cell Segmentation Matters

In the realm of microscopy, precise cell segmentation is not just a technical step but the foundation upon which critical biological insights are built. Whether for basic research or clinical application, the quality of segmentation directly impacts the reliability of downstream analyses:

Quantitative Cell Analysis:

Cell Counts & Proliferation: Essential for growth curves, determining confluence in cell cultures, and performing high-throughput viability assays in drug screening.

Morphology & Size Analysis: Enables objective classification of cellular phenotypes, detection of apoptosis or other cellular changes, and characterization of disease states (e.g., cancer cell morphology).

Spatial Biology & Interactions:

Tissue Architecture: Understanding the spatial organization of cells within tissues.

Cell-Cell Interaction Studies: Analyzing proximity and contact between different cell types.

Tracking & Dynamics:

Live-Cell Imaging: Monitoring cellular behavior, migration, and responses to stimuli over time.

Manual segmentation, the historical gold standard, is notoriously slow, subjective, and simply unscalable for the large datasets generated by modern microscopy techniques. Deep learning offers a path to overcome these limitations, bringing unprecedented speed, objectivity, and automation to the bench.

2. Benefits of AI in Cell Segmentation

AI-powered cell segmentation systems offer transformative advantages for biomedical imaging:

Speed and Efficiency: Drastically reduces the time required for image analysis compared to manual methods, enabling high-throughput screening.

Objectivity and Reproducibility: AI models apply consistent criteria for segmentation, eliminating subjective human interpretation and ensuring results are comparable across experiments and researchers.

24/7 Operation: Automated systems can process large batches of images continuously, without fatigue or breaks.

Complex Pattern Recognition: Deep learning models can identify and segment cells even in challenging conditions, such as low contrast, high cell density, or complex backgrounds, often exceeding human capabilities.

Extraction of Rich Data: Beyond simple outlines, AI can extract a wealth of quantitative data (e.g., intensity, texture, shape features) for each segmented cell, facilitating deeper analysis.
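As a rough illustration of the "rich data" point, the sketch below derives area, centroid, and mean intensity for each cell from a labeled mask. It assumes a label image in which 0 is background and each positive integer marks one cell; the function name `cell_features` and the toy arrays are hypothetical, not part of the project described here.

```python
import numpy as np

def cell_features(label_image, intensity_image):
    """Return per-cell measurements from a labeled mask.

    label_image: 2-D integer array, 0 = background, k > 0 = pixels of cell k.
    intensity_image: 2-D array of the same shape holding pixel intensities.
    """
    features = []
    for cell_id in np.unique(label_image):
        if cell_id == 0:  # skip background
            continue
        rows, cols = np.nonzero(label_image == cell_id)
        features.append({
            "cell_id": int(cell_id),
            "area_px": int(rows.size),  # number of pixels in the mask
            "centroid_rc": (float(rows.mean()), float(cols.mean())),
            "mean_intensity": float(intensity_image[rows, cols].mean()),
        })
    return features

# Toy example: two cells on a 4x4 image.
labels = np.array([
    [1, 1, 0, 0],
    [1, 1, 0, 0],
    [0, 0, 0, 2],
    [0, 0, 2, 2],
])
intensity = np.arange(16, dtype=float).reshape(4, 4)
features = cell_features(labels, intensity)
for row in features:
    print(row)
```

Texture or shape descriptors (eccentricity, perimeter, and so on) would follow the same pattern: select the pixels of one cell, then reduce them to a number.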

3. Implementing Cell Segmentation with YOLOv9-Seg

For this project, we used the YOLO (You Only Look Once) architecture, specifically the yolov9c_seg variant, for instance segmentation of cells in microscopy images. We also evaluated yolov8s_seg and yolov8n_seg; yolov9c_seg demonstrated a compelling balance of precision and model complexity for this task.

Dataset Preparation

The foundation of our high-performing model is the “Cell segmentation dataset (v1.0)”.

Content Focus: This dataset is specifically curated for instance segmentation of cells in microscopy images, forming the basis for training our model.

Annotation: It contains images with corresponding instance masks (pixel-level outlines for each individual cell) suitable for training deep learning models like YOLOv9-Seg.

Diversity (Assumed General Characteristics): A high-quality dataset for this purpose typically includes varied cell types, different imaging conditions (e.g., phase-contrast, fluorescence), a range of cell densities, and diverse morphologies. Such diversity is crucial for developing a model that is robust and generalizes well to unseen data. The total image count and the train/val/test splits for the “Cell segmentation dataset (v1.0)” are recorded in the experiment logs and are not reproduced here.

Model Architecture: YOLOv9-Seg (yolov9c_seg)

We adopted YOLOv9-Seg, specifically the yolov9c_seg variant with 27.9 million parameters. This choice was driven by its balance of instance segmentation accuracy and inference speed, which makes it suitable for applications requiring real-time feedback or deployment on edge GPUs.

Key Features:

Instance Segmentation: Provides a pixel-level mask for each detected cell, not just a bounding box. This is critical for accurate morphological analysis.

Efficiency: The yolov9c_seg variant offers a good compromise between model capacity (27.9M parameters) and computational cost.

State-of-the-Art: Builds upon the successful YOLO architecture, incorporating advancements in its backbone and neck design for improved feature extraction, leading to enhanced segmentation accuracy.

Training Parameters

The yolov9c_seg model was trained on the “Cell segmentation dataset (v1.0)” using the PyTorch framework with a configuration optimized for this task:

| Parameter | Value | Notes |
|---|---|---|
| Model | yolov9c_seg | YOLOv9-C Instance Segmentation |
| Parameters | 27.9 M | |
| Framework | PyTorch | |
| Epochs | 30 | Typical setting, adjust per experiment |
| Batch size | 4 | Typical setting, adjust per experiment |
| Learning rate | 0.001 | Typical setting, adjust per experiment |
| Optimizer | SGD + momentum 0.95 | Typical setting, adjust per experiment |
| Weight decay | 0.0005 | Typical setting, adjust per experiment |
| Primary metric | Precision (Mask) | Focus on mask quality |

(The epochs, batch size, learning rate, optimizer, and weight decay listed here are common settings for this family of models. Confirm the exact values against the training logs for the specific yolov9c_seg run on the “Cell segmentation dataset (v1.0)”.)
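A minimal sketch of how these settings might be passed to the Ultralytics training API is shown below. It assumes the `ultralytics` package is installed and that `yolov9c-seg.pt` is the pretrained checkpoint name; the dataset file `cell_seg_v1.yaml` and the helper `train_cell_segmenter` are hypothetical placeholders, and the actual run may have used different arguments.

```python
# Hyperparameters taken from the training table; the key names follow the
# argument names accepted by Ultralytics' train() call.
TRAIN_ARGS = {
    "epochs": 30,
    "batch": 4,
    "lr0": 0.001,          # initial learning rate
    "optimizer": "SGD",
    "momentum": 0.95,
    "weight_decay": 0.0005,
}

def train_cell_segmenter(data_yaml, weights="yolov9c-seg.pt"):
    """Fine-tune a YOLOv9-C segmentation checkpoint on a dataset YAML.

    data_yaml: path to an Ultralytics-style dataset description
               (hypothetical here, e.g. "cell_seg_v1.yaml").
    weights:   checkpoint name, assumed rather than confirmed by the post.
    """
    # Imported lazily so the configuration can be inspected without the package.
    from ultralytics import YOLO

    model = YOLO(weights)
    return model.train(data=data_yaml, **TRAIN_ARGS)

# Example call (not executed here):
# train_cell_segmenter("cell_seg_v1.yaml")
```

Keeping the hyperparameters in one dictionary makes it easy to log them alongside each run, which is where the post says the authoritative values live.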

Model Evaluation

The yolov9c_seg model’s performance was rigorously evaluated on the validation and test splits of the “Cell segmentation dataset (v1.0)” using standard instance segmentation metrics. The primary metric for success was Precision of the segmentation masks.

| Metric | Validation | Test |
|---|---|---|
| Precision (Mask) | 0.62 | 0.66 |
| Recall (Mask) | 0.42 | 0.48 |
| mAP@50 (Mask) | 0.49 | 0.54 |
| F1 Score (Test, Mask) | - | 0.556 |

(F1 Score calculated as 2 * (Precision * Recall) / (Precision + Recall) for Test results)
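The F1 arithmetic can be checked in a few lines. The helper name `f1_score` is only illustrative; the inputs are the test precision and recall reported for the mask metrics.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall; 0.0 when both are zero."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)

# Test-split mask metrics: precision 0.66, recall 0.48.
test_f1 = f1_score(0.66, 0.48)
print(round(test_f1, 3))  # 0.556
```

Because F1 is a harmonic mean, it sits closer to the lower of the two inputs, so the 0.48 recall pulls the score down more than the 0.66 precision lifts it.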

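On the test split of the “Cell segmentation dataset (v1.0)”, a mask precision of 0.66 means that roughly two out of every three masks predicted by yolov9c_seg correspond to an annotated cell. The recall of 0.48 shows the other side: about half of the annotated cells are not recovered. The mAP@50 of 0.54, computed at a 0.5 IoU threshold, is consistent with this picture of a model that is fairly reliable on the cells it reports but still misses many.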
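To make the 0.5 IoU threshold concrete, here is a small sketch of mask IoU (intersection over union) between one predicted and one annotated mask. The function `mask_iou` and the toy masks are illustrative assumptions; the evaluation tooling used for the reported numbers may compute this differently.

```python
import numpy as np

def mask_iou(pred_mask, true_mask):
    """Intersection over union of two binary masks of the same shape."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    union = np.logical_or(pred, true).sum()
    if union == 0:  # both masks empty: define IoU as 0 to avoid dividing by zero
        return 0.0
    return float(np.logical_and(pred, true).sum() / union)

# Toy example: the prediction covers 6 pixels, the annotation 4 of those 6.
pred = np.zeros((4, 4), dtype=bool)
pred[0:2, 0:3] = True
true = np.zeros((4, 4), dtype=bool)
true[0:2, 0:2] = True

iou = mask_iou(pred, true)  # intersection 4 px, union 6 px
is_match_at_50 = iou >= 0.5
print(iou, is_match_at_50)
```

Under mAP@50, a predicted mask counts as a correct detection only if its IoU with an unmatched annotated cell reaches 0.5, as it does in this toy case.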

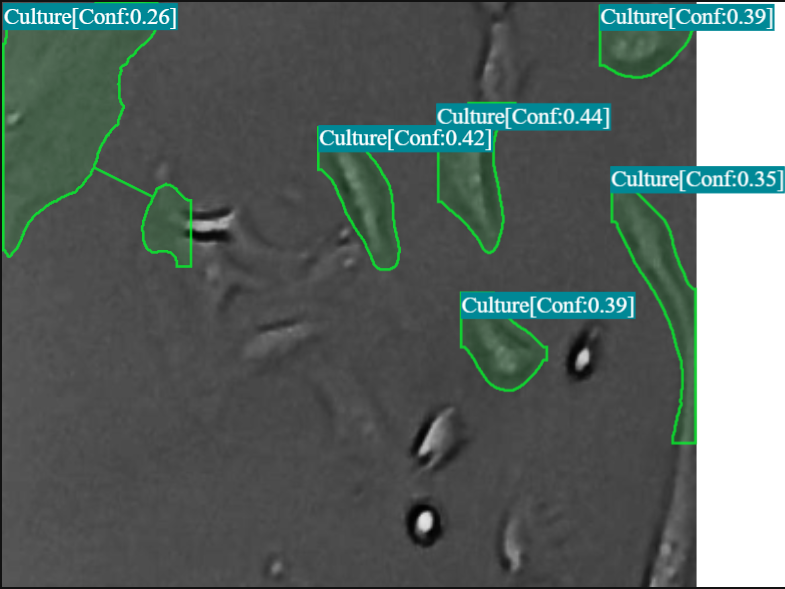

Qualitative Results (Model Inference Examples)

Below are illustrative inference frames demonstrating YOLOv9-Seg’s ability to generate accurate cell masks on microscopy images. These visualizations highlight the model’s performance in delineating individual cells.
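For readers who want to produce similar frames, the sketch below shows one way to run inference and collapse the per-cell masks into a single label image. It assumes the Ultralytics `YOLO` class and its `Results.masks.data` tensor; `predict_cell_masks`, `masks_to_label_image`, and the file paths are hypothetical names, not the code behind the figures in this post.

```python
import numpy as np

def masks_to_label_image(masks, threshold=0.5):
    """Collapse an (N, H, W) stack of per-cell masks into one (H, W) label image.

    Background is 0 and cell i gets label i + 1. Where masks overlap,
    the later mask overwrites the earlier one.
    """
    masks = np.asarray(masks)
    label_image = np.zeros(masks.shape[1:], dtype=np.int32)
    for index, mask in enumerate(masks, start=1):
        label_image[mask > threshold] = index
    return label_image

def predict_cell_masks(weights_path, image_path):
    """Run a trained segmentation model on one image and return its masks.

    Both paths are placeholders. The returned array has the mask resolution
    produced by the model, which may differ from the original image size.
    """
    from ultralytics import YOLO  # lazy import: only needed for real inference

    result = YOLO(weights_path)(image_path)[0]
    if result.masks is None:  # no cells detected
        return np.zeros((0, *result.orig_shape), dtype=np.float32)
    return result.masks.data.cpu().numpy()

# Toy stack of two 3x3 masks standing in for model output.
demo_masks = np.array([
    [[1, 1, 0], [0, 0, 0], [0, 0, 0]],
    [[0, 0, 0], [0, 1, 1], [0, 0, 0]],
], dtype=float)
demo_labels = masks_to_label_image(demo_masks)
print(demo_labels)
```

A label image like this is a convenient hand-off format, since most downstream measurement code expects one integer ID per cell.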

4. Real-World Applications and Impact

The successful deployment of AI-driven cell segmentation offers tangible benefits across various biomedical research and clinical workflows:

Drug Discovery & High-Throughput Screening:

Automated analysis of cell viability, proliferation, and morphological changes in response to drug candidates across thousands of wells.

Enables faster identification of promising compounds and reduces manual labor (e.g., reported 5x faster confluence checks in stem-cell labs).

Cancer Research & Diagnostics:

Objective quantification of tumor heterogeneity, cancer cell morphology, and mitotic counts.

Supports biomarker discovery and can aid pathologists in assessing tissue samples.

Cell Culture & Bioprocessing:

Real-time monitoring of cell confluence, health, and differentiation status in bioreactors or culture flasks.

Ensures consistency and quality control in cell-based manufacturing (e.g., 80% reduction in manual QC effort).

Basic Biological Research:

Facilitates large-scale phenotyping studies, cell cycle analysis, and the study of cellular dynamics.

Provides consistent masks across operators and imaging sessions, improving reproducibility of research findings.

5. Future Developments

The field of AI in microscopy is dynamic, with several exciting avenues for future improvement:

Enhanced Accuracy for Challenging Samples: Continued development of models to better handle overlapping cells, faint boundaries, and diverse cell types with minimal retraining.

Multi-Modal Data Fusion: Integrating information from different microscopy modalities (e.g., phase contrast, fluorescence, histology) for more comprehensive segmentation.

Self-Supervised and Weakly-Supervised Learning: Reducing the dependency on large, meticulously annotated datasets by leveraging unlabeled or partially labeled data.

Explainable AI (XAI): Developing methods to understand how AI models make their segmentation decisions, increasing trust and facilitating troubleshooting.

6. Conclusion

AI-powered cell segmentation, exemplified by models like yolov9c_seg trained on datasets such as the “Cell segmentation dataset (v1.0)”, is rapidly transitioning from a specialized research endeavor to an indispensable and practical tool for laboratories of all sizes. By combining carefully curated datasets, high-performance open-source models, and accessible deployment pipelines, the barriers to adopting automated image analysis are lower than ever. The ability to generate accurate cell masks at speeds compatible with live-cell imaging and high-throughput screens empowers researchers to ask more complex questions and accelerate the pace of biomedical discovery.


📥 Resources

Training Framework & Model Implementation: Ultralytics GitHub

YOLOv9 Paper (Architecture Concepts): arXiv:2402.13616