Facial Emotion Recognition: Understanding Human Emotions with AI

Facial emotion recognition (FER) is a fascinating application of artificial intelligence (AI) that enables computers to identify and interpret human emotions by analyzing facial expressions. This technology has wide-ranging applications, from improving customer service to enhancing mental health diagnostics and creating more responsive human-computer interactions. Using machine learning and computer vision, FER systems analyze visual cues to determine emotions like happiness, sadness, anger, surprise, and more.

This post explores why facial emotion recognition matters, how AI-powered FER systems work, and their potential impact on industries and daily life.

1. Why Facial Emotion Recognition is Important

Human emotions are fundamental to how we communicate and interact. Understanding emotions can provide valuable insights for applications such as:

Customer Experience: FER can be used in retail to gauge customer satisfaction or in call centers to identify frustrated customers.

Education: In e-learning platforms, FER can monitor student engagement, helping educators understand when students are attentive or struggling.

Mental Health: By tracking emotional states over time, FER can assist in diagnosing and monitoring conditions like depression and anxiety.

Social Robotics: Robots equipped with FER can respond to human emotions in real-time, making them more empathetic and effective in roles like caregiving or companionship.

By enabling machines to “read” emotions, FER technology makes human-computer interactions more natural and intuitive.

2. Benefits of AI in Facial Emotion Recognition

FER technology provides several advantages:

Improved Decision-Making: By understanding emotions, FER can help businesses and service providers make better-informed decisions.

Enhanced User Experiences: FER adds a layer of emotional intelligence to applications, making interactions more user-friendly and adaptive.

Automation of Emotion Analysis: FER automates the previously subjective task of emotion analysis, providing consistent and objective assessments.

3. Implementing Facial Emotion Recognition with Matrice

Dataset Preparation

Model Training

Model Evaluation

Model Inference Optimization

Model Deployment

Dataset Preparation

The dataset consists of images capturing people displaying 7 distinct emotions (anger, contempt, disgust, fear, happiness, sadness and surprise). Each image in the dataset represents one of these specific emotions, enabling researchers and machine learning practitioners to study and develop models for emotion recognition and analysis.

Model Training

The model was trained using the following experiment parameters:

| Parameter | Value | Description |
|---|---|---|
| Model | efficientnet_v2_s | EfficientNetV2 Small variant, optimized for accuracy and efficiency |
| Batch Size | 32 | Number of samples processed in each training iteration |
| Epochs | 100 | Number of complete passes through the training dataset |
| Learning Rate | 0.001 | Initial learning rate for model optimization |
| LR Gamma | 0.1 | Multiplicative factor for learning rate decay |
| LR Min | 0.000001 | Minimum learning rate threshold |
| LR Scheduler | CosineAnnealingLR | Cosine annealing learning rate scheduling |
| LR Step Size | 5 | Number of epochs between learning rate updates |
| Min Delta | 0.0001 | Minimum change in monitored quantity for early stopping |
| Momentum | 0.9 | Momentum coefficient for optimizer |
| Optimizer | AdamW | AdamW optimizer with weight decay regularization |
| Patience | 10 | Number of epochs with no improvement before early stopping |
| Primary Metric | acc@1 | Top-1 accuracy used for model evaluation |
| Weight Decay | 0.001 | L2 regularization factor to prevent overfitting |
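To make the learning rate settings concrete, here is a minimal sketch of the closed-form cosine annealing schedule using the Learning Rate, LR Min, and Epochs values from the table. It assumes the schedule spans all 100 epochs (the table does not state the annealing period), and the function name `cosine_annealing_lr` is illustrative rather than part of the Matrice platform. In PyTorch's scheduler API, `gamma` and `step_size` are arguments of step-based schedulers such as `StepLR`, so this sketch does not use LR Gamma or LR Step Size.

```python
import math

# Values taken from the parameter table; T_MAX = EPOCHS is an assumption.
BASE_LR = 0.001     # Learning Rate
MIN_LR = 0.000001   # LR Min
EPOCHS = 100        # Epochs


def cosine_annealing_lr(epoch, base_lr=BASE_LR, min_lr=MIN_LR, t_max=EPOCHS):
    """Closed-form cosine annealing: base_lr at epoch 0, min_lr at epoch t_max."""
    cosine = (1 + math.cos(math.pi * epoch / t_max)) / 2
    return min_lr + (base_lr - min_lr) * cosine


# One learning rate per epoch boundary, from epoch 0 through epoch 100.
schedule = [cosine_annealing_lr(epoch) for epoch in range(EPOCHS + 1)]

for epoch in (0, 25, 50, 75, 100):
    print(f"epoch {epoch:3d}: lr = {schedule[epoch]:.7f}")
```

The rate falls slowly at first, fastest around the midpoint, and then flattens out as it approaches the minimum.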

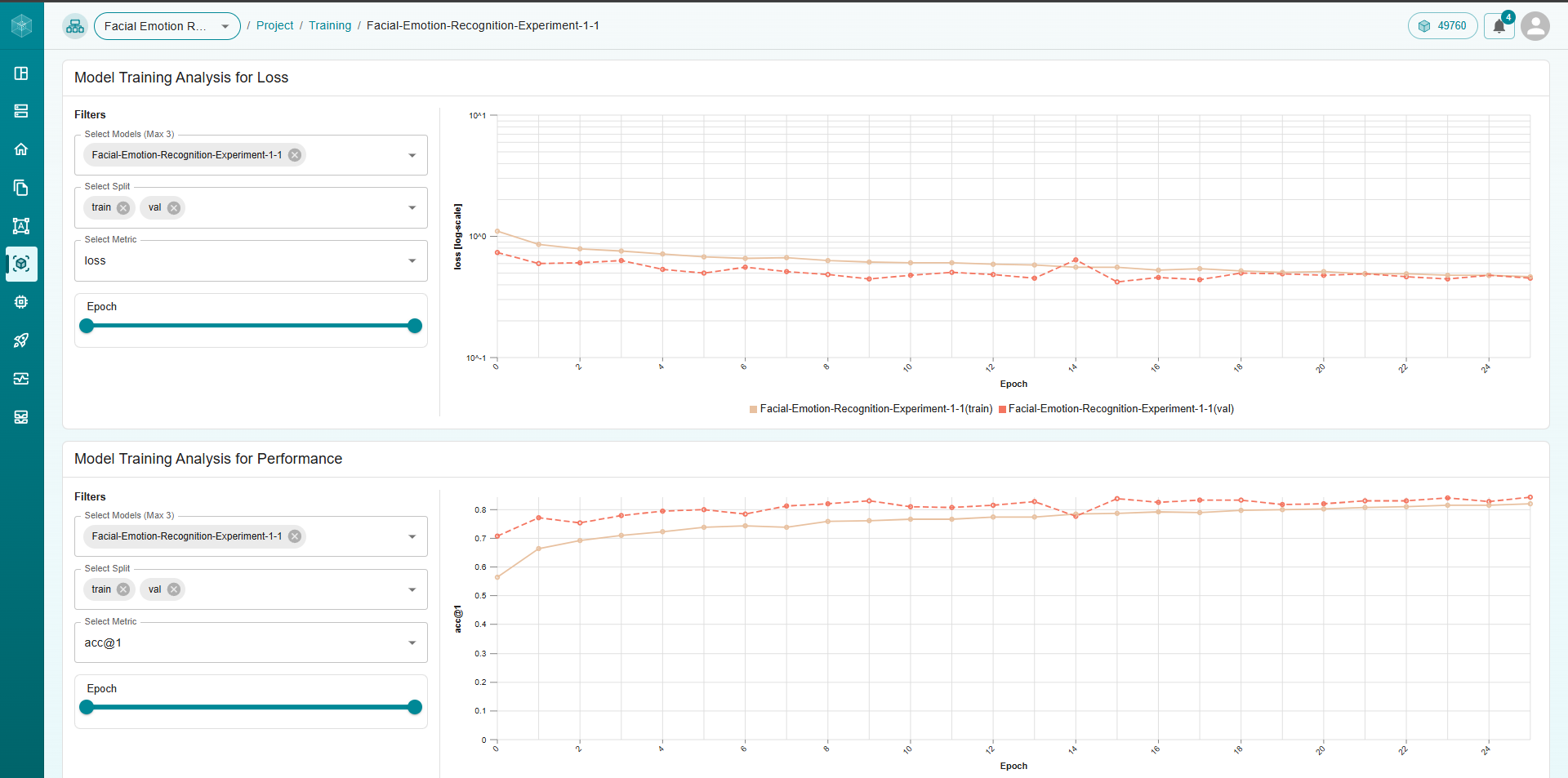

Model training graph from Matrice platform
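The Patience and Min Delta parameters describe an early-stopping rule. The sketch below shows one common way such a rule is implemented, assuming the monitored quantity is validation acc@1 (higher is better). The `EarlyStopping` class is a hypothetical helper written for illustration, not the platform's own code.

```python
class EarlyStopping:
    """Stop training once the metric has not improved for `patience` epochs."""

    def __init__(self, patience=10, min_delta=0.0001):
        self.patience = patience      # Patience from the parameter table
        self.min_delta = min_delta    # Min Delta from the parameter table
        self.best = None              # best metric value seen so far
        self.bad_epochs = 0           # consecutive epochs without improvement

    def step(self, metric):
        """Record one epoch's validation metric; return True when training should stop."""
        if self.best is None or metric > self.best + self.min_delta:
            # Improvement larger than min_delta: remember it and reset the counter.
            self.best = metric
            self.bad_epochs = 0
        else:
            self.bad_epochs += 1
        return self.bad_epochs >= self.patience


# Usage with a shortened patience so the effect is visible on a few made-up values.
stopper = EarlyStopping(patience=3, min_delta=0.0001)
history = [0.80, 0.82, 0.82005, 0.82, 0.819]
decisions = [stopper.step(acc) for acc in history]
print(decisions)
```

A gain smaller than Min Delta, such as 0.82 to 0.82005, counts as no improvement, which keeps noise in the validation metric from resetting the counter.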

Model Evaluation

Once training was complete, we evaluated the model using key performance metrics to ensure its effectiveness:

Accuracy@1: Measures the model’s top-1 prediction accuracy

Accuracy@5: Measures whether the correct class appears among the model’s five highest-scoring predictions

Precision: The proportion of positive predictions that are correct

Recall: Measures the proportion of actual positives correctly identified

Specificity: Measures the proportion of actual negatives correctly identified

F1 Score: The harmonic mean of precision and recall
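The definitions above can be sketched in plain Python. The example assumes per-class metrics are computed one-vs-rest from a confusion matrix, which is a common convention but not something the post confirms. The function names and the 3-class matrix are made up for illustration and are not the model's real outputs.

```python
def one_vs_rest_metrics(confusion, cls):
    """Metrics for one class; confusion[i][j] counts true class i predicted as class j."""
    n = len(confusion)
    total = sum(sum(row) for row in confusion)
    tp = confusion[cls][cls]
    fn = sum(confusion[cls]) - tp                          # true cls, predicted as another class
    fp = sum(confusion[i][cls] for i in range(n)) - tp     # another class, predicted as cls
    tn = total - tp - fn - fp
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    specificity = tn / (tn + fp) if tn + fp else 0.0
    f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
    return {
        "accuracy": (tp + tn) / total,
        "precision": precision,
        "recall": recall,
        "specificity": specificity,
        "f1": f1,
    }


def top_k_accuracy(scores, labels, k):
    """Fraction of samples whose true label is among the k highest-scoring classes."""
    hits = 0
    for row, label in zip(scores, labels):
        ranked = sorted(range(len(row)), key=lambda j: row[j], reverse=True)
        hits += label in ranked[:k]
    return hits / len(labels)


# Toy 3-class confusion matrix (illustrative numbers only).
confusion = [
    [8, 1, 1],
    [2, 6, 2],
    [0, 1, 9],
]
overall_accuracy = sum(confusion[i][i] for i in range(3)) / 30
class_1 = one_vs_rest_metrics(confusion, 1)
print(overall_accuracy, class_1)

# Toy class scores for three samples, with their true labels.
scores = [[0.1, 0.7, 0.2], [0.5, 0.3, 0.2], [0.2, 0.3, 0.5]]
labels = [1, 1, 0]
print(top_k_accuracy(scores, labels, 1), top_k_accuracy(scores, labels, 2))
```

Under this convention a class's accuracy counts true negatives as correct, so it can sit above the overall top-1 accuracy even when that class's recall is modest.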

Validation Results:

| Metric | All Categories | Ahegao | Angry | Happy | Neutral | Sad | Surprise |
|---|---|---|---|---|---|---|---|
| Accuracy@1 | 0.843 | 0.995 | 0.964 | 0.971 | 0.884 | 0.901 | 0.971 |
| Precision | 0.819 | 0.975 | 0.895 | 0.921 | 0.739 | 0.816 | 0.934 |
| Recall | 0.880 | 0.967 | 0.649 | 0.963 | 0.858 | 0.789 | 0.691 |
| F1 Score | 0.842 | 0.971 | 0.752 | 0.941 | 0.794 | 0.802 | 0.794 |
| Specificity | 0.969 | 0.998 | 0.993 | 0.973 | 0.893 | 0.939 | 0.996 |

Test Results:

| Metric | All Categories | Ahegao | Angry | Happy | Neutral | Sad | Surprise |
|---|---|---|---|---|---|---|---|
| Accuracy@1 | 0.841 | 0.992 | 0.966 | 0.972 | 0.881 | 0.896 | 0.973 |
| Precision | 0.825 | 0.958 | 0.870 | 0.951 | 0.739 | 0.792 | 0.927 |
| Recall | 0.873 | 0.942 | 0.712 | 0.933 | 0.842 | 0.802 | 0.718 |
| F1 Score | 0.845 | 0.950 | 0.783 | 0.942 | 0.787 | 0.797 | 0.809 |
| Specificity | 0.968 | 0.996 | 0.990 | 0.985 | 0.895 | 0.928 | 0.995 |

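These metrics indicate solid overall performance: top-1 accuracy is 0.843 on the validation set and 0.841 on the test set, and specificity is at least 0.89 for every category. Ahegao and Happy score highest, with F1 scores of 0.94 or above on both splits. Precision and recall are less balanced for Angry and Surprise, where recall (0.65 to 0.72) trails precision (0.87 to 0.93), so the model misses some of these expressions even though its positive predictions for them are usually correct.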
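As a quick sanity check, the per-class F1 scores in the validation table can be recomputed from the reported precision and recall. The snippet below does this with the harmonic-mean formula; the dictionary simply copies the validation figures from the table, and the helper name `f1_score` is illustrative.

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


# (precision, recall, reported F1) per class, copied from the validation table.
validation = {
    "Ahegao": (0.975, 0.967, 0.971),
    "Angry": (0.895, 0.649, 0.752),
    "Happy": (0.921, 0.963, 0.941),
    "Neutral": (0.739, 0.858, 0.794),
    "Sad": (0.816, 0.789, 0.802),
    "Surprise": (0.934, 0.691, 0.794),
}

recomputed = {}
for name, (precision, recall, reported) in validation.items():
    recomputed[name] = f1_score(precision, recall)
    gap = precision - recall  # positive when recall trails precision
    print(f"{name:9s} F1 = {recomputed[name]:.4f} (reported {reported:.3f}), "
          f"precision - recall = {gap:+.3f}")
```

The recomputed values agree with the table to within rounding, and the precision-recall gap column shows which classes are least balanced.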

Model Inference Optimization

A unique feature of our platform is the ability to export trained models to a variety of formats. For this use case, the model can be exported from PyTorch (.pt) format to formats like ONNX, TensorRT, and OpenVINO. This is particularly valuable for edge deployments, such as smart cameras, where processing power may be limited.

By offering flexibility in model format, we ensure that models can be deployed in real-time settings without requiring extensive computational resources.
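Outside the platform, a comparable PyTorch-to-ONNX export could look roughly like the sketch below. It rests on several assumptions that the post does not confirm: the checkpoint is a plain `state_dict` for torchvision's `efficientnet_v2_s`, the classifier has six outputs (matching the six categories in the results tables), and inputs are 224x224 RGB images. The function names, tensor names, and file paths are hypothetical.

```python
def build_export_options():
    """Keyword arguments for torch.onnx.export; the tensor names are illustrative."""
    return {
        "input_names": ["image"],
        "output_names": ["logits"],
        # Mark the batch dimension as dynamic so the exported model accepts any batch size.
        "dynamic_axes": {"image": {0: "batch"}, "logits": {0: "batch"}},
    }


def export_to_onnx(checkpoint_path, onnx_path, num_classes=6, image_size=224):
    """Load a checkpoint into EfficientNetV2-S and write an ONNX file."""
    # Imported here so the options helper above works without PyTorch installed.
    import torch
    from torchvision.models import efficientnet_v2_s

    model = efficientnet_v2_s(weights=None, num_classes=num_classes)
    # Assumption: the file contains only the model's state_dict.
    state_dict = torch.load(checkpoint_path, map_location="cpu")
    model.load_state_dict(state_dict)
    model.eval()

    # The exporter traces the model with a dummy input of the expected shape.
    dummy_input = torch.randn(1, 3, image_size, image_size)
    torch.onnx.export(model, dummy_input, onnx_path, **build_export_options())
```

With PyTorch and torchvision installed, a call such as `export_to_onnx("model.pt", "model.onnx")` would produce a file that ONNX-compatible runtimes can load. Conversion to TensorRT or OpenVINO typically starts from such an ONNX file.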

Model Deployment

Once the model is trained and optimized, deploying it is seamless with Matrice. Our platform supports real-time inference and allows integration via APIs for use in various applications.

You can use our pre-built API integration code for various programming languages, making it easy to integrate computer vision functionality into web services, mobile apps, or custom applications.

4. Applications of Facial Emotion Recognition

Facial emotion recognition has transformative applications across several fields:

Marketing and Advertising: By analyzing audience reactions, FER can help brands measure emotional responses to ads, improving targeted marketing.

Healthcare and Therapy: FER assists in mental health assessments by analyzing changes in patients’ emotional expressions over time.

Gaming: FER enables games to adapt to players’ emotional states, creating more engaging and personalized experiences.

Security and Surveillance: FER can help detect unusual or suspicious behavior, potentially identifying threats based on emotional cues.

Human Resources: In hiring processes or employee well-being programs, FER can gauge candidate and employee responses to improve experiences.

Conclusion

Facial emotion recognition is an exciting AI-driven field that opens up new possibilities for making technology more emotionally aware and responsive. By bridging the gap between human emotions and machine understanding, FER can enhance a range of industries from healthcare to entertainment. As FER technology evolves, it promises to bring us closer to more empathetic, responsive, and effective human-computer interactions, ultimately improving the way we connect and communicate with technology.
