Road Safety with Matrice: Detecting Distracted Drivers

Jan 13, 2025

Road safety is a critical concern, and distracted driving is a leading cause of accidents worldwide. Common distractions, such as texting, talking on the phone, or even adjusting the radio, significantly increase the risk of collisions. To combat this, computer vision models can monitor driver behavior in real time, identify distractions, and alert drivers so they can correct their actions promptly.

This article walks through the process of building a distracted driver detection model using Matrice’s no-code platform. We’ll cover each step, from dataset preparation to model deployment, and show how our solution can improve road safety by detecting driver distractions in various scenarios.

Dataset Preparation

1. Dataset Preview

To train an effective model, we need a dataset that represents various distracted driving behaviors. For this project, we have a dataset with images categorized into the following classes:

Drinking

Hair and makeup

Operating the radio

Reaching behind

Safe driving

Talking on the phone

Talking to a passenger

Texting

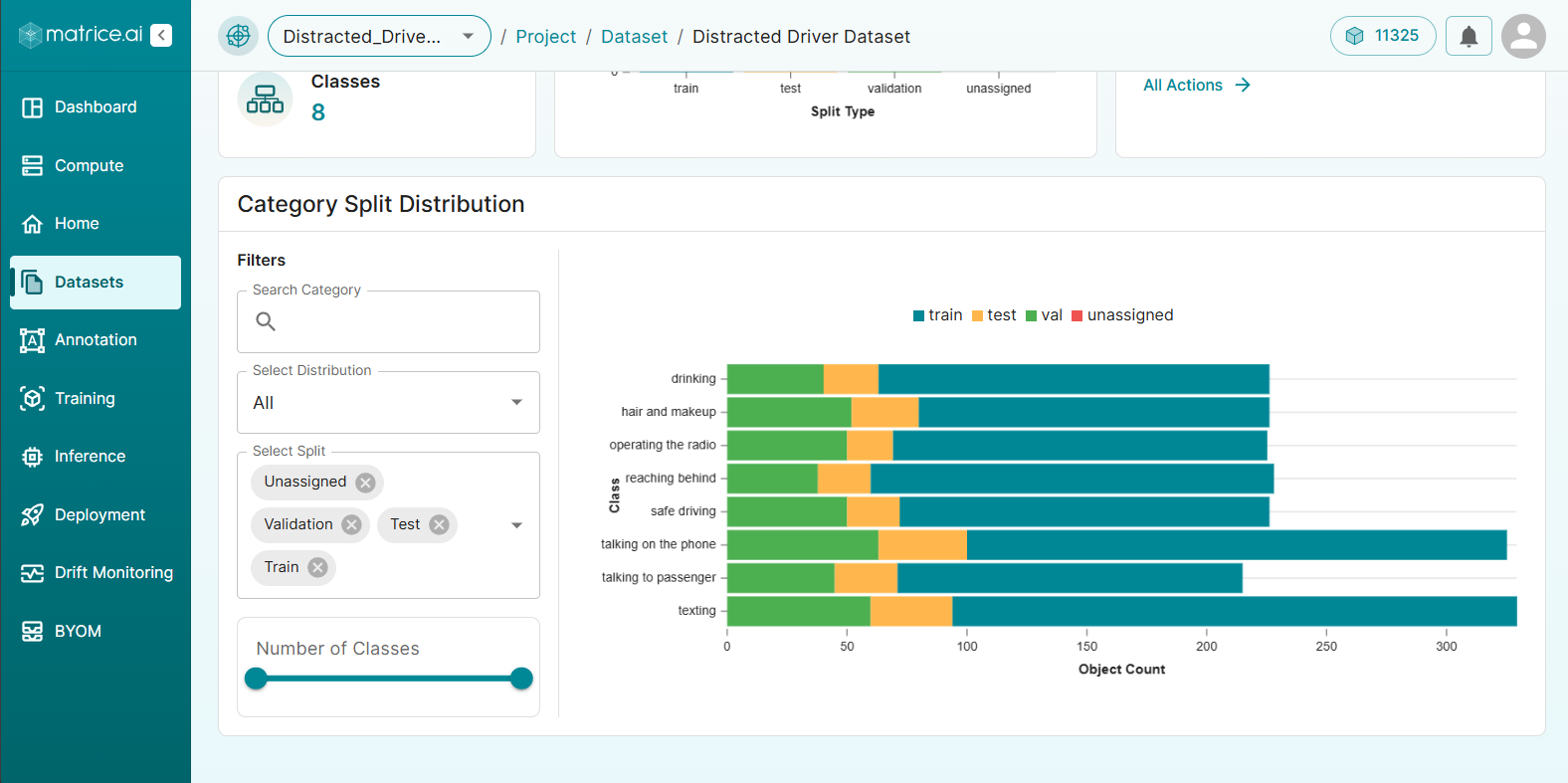

The dataset was split into training, validation, and test sets in a 70:20:10 ratio. The training set is used to fit the model, the validation set to compare configurations and watch for overfitting, and the held-out test set to check how well the model generalizes to new data.
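For readers who want to reproduce a 70:20:10 split outside the platform, here is a minimal sketch. It assumes the data is available as (image path, class label) pairs and splits each class separately so that every subset keeps roughly the same class balance. The function name, file names, and the per-class (stratified) strategy are illustrative assumptions, not a description of how Matrice performs the split internally.

```python
import random
from collections import defaultdict


def stratified_split(samples, ratios=(0.7, 0.2, 0.1), seed=42):
    """Split (path, label) pairs into train/val/test, class by class."""
    by_class = defaultdict(list)
    for path, label in samples:
        by_class[label].append(path)

    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    splits = {"train": [], "val": [], "test": []}
    for label, paths in sorted(by_class.items()):
        rng.shuffle(paths)
        n_train = round(len(paths) * ratios[0])
        n_val = round(len(paths) * ratios[1])
        splits["train"] += [(p, label) for p in paths[:n_train]]
        splits["val"] += [(p, label) for p in paths[n_train:n_train + n_val]]
        # Whatever remains after train and val goes to the test set.
        splits["test"] += [(p, label) for p in paths[n_train + n_val:]]
    return splits


# Toy data: 100 hypothetical images for each of two classes.
samples = [
    (f"{label}_{i:03d}.jpg", label)
    for label in ("Texting", "Safe driving")
    for i in range(100)
]
splits = stratified_split(samples)
print({name: len(items) for name, items in splits.items()})
```

Splitting per class rather than over the whole pool helps when some behaviors are rarer than others, since a purely random split could leave a small class underrepresented in the validation or test set.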

2. Categorical Distribution of Dataset

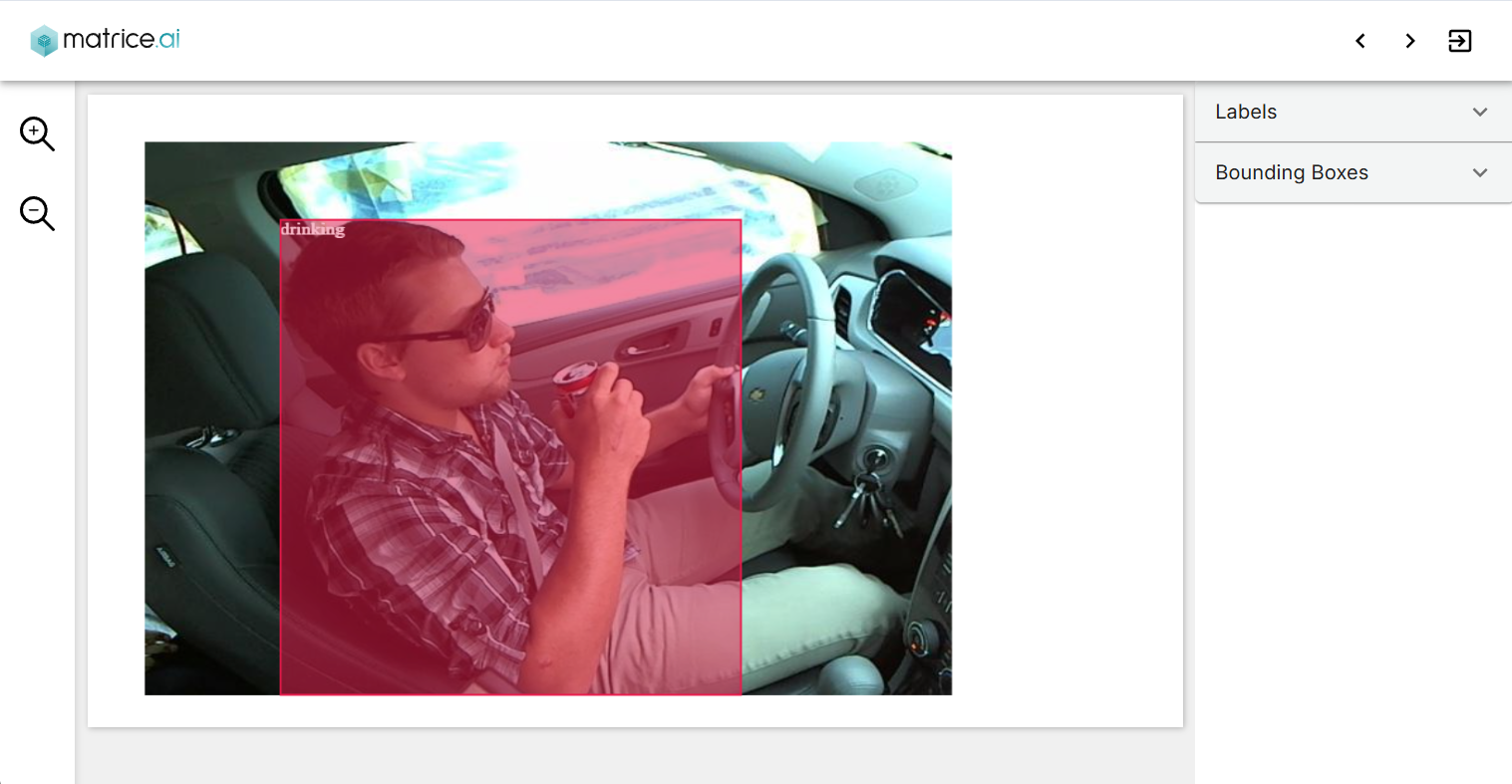

Dataset Annotation

Our dataset was pre-annotated, which saved us the time of labeling each category by hand. The annotations were in MS COCO format, which our platform supports directly, so we could start training immediately. If you don’t have labeled data, our ML-Assisted Labeling tool can annotate it for you.
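If you have not worked with COCO annotations before, the sketch below shows the general layout: a JSON document with `images`, `annotations`, and `categories` lists, where each annotation points to an image and a category by ID and stores its box as `[x, y, width, height]`. The file names, IDs, and box values are made up for illustration and are not taken from our dataset. The same few lines also give a quick per-class count, which is useful for checking the class distribution before training.

```python
import json
from collections import Counter

# A tiny, made-up annotation file in COCO layout (all values are illustrative).
coco_text = """
{
  "images": [
    {"id": 1, "file_name": "driver_0001.jpg", "width": 640, "height": 480},
    {"id": 2, "file_name": "driver_0002.jpg", "width": 640, "height": 480},
    {"id": 3, "file_name": "driver_0003.jpg", "width": 640, "height": 480}
  ],
  "annotations": [
    {"id": 1, "image_id": 1, "category_id": 8, "bbox": [120, 60, 300, 380], "area": 114000, "iscrowd": 0},
    {"id": 2, "image_id": 2, "category_id": 8, "bbox": [110, 70, 310, 370], "area": 114700, "iscrowd": 0},
    {"id": 3, "image_id": 3, "category_id": 5, "bbox": [100, 50, 320, 400], "area": 128000, "iscrowd": 0}
  ],
  "categories": [
    {"id": 5, "name": "Safe driving"},
    {"id": 8, "name": "Texting"}
  ]
}
"""


def count_per_category(coco):
    """Return how many annotations each category name has."""
    id_to_name = {cat["id"]: cat["name"] for cat in coco["categories"]}
    return Counter(id_to_name[ann["category_id"]] for ann in coco["annotations"])


coco = json.loads(coco_text)
counts = count_per_category(coco)
for name, n in sorted(counts.items()):
    print(f"{name}: {n}")
```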

3. Preview of Annotated Image

Model Training

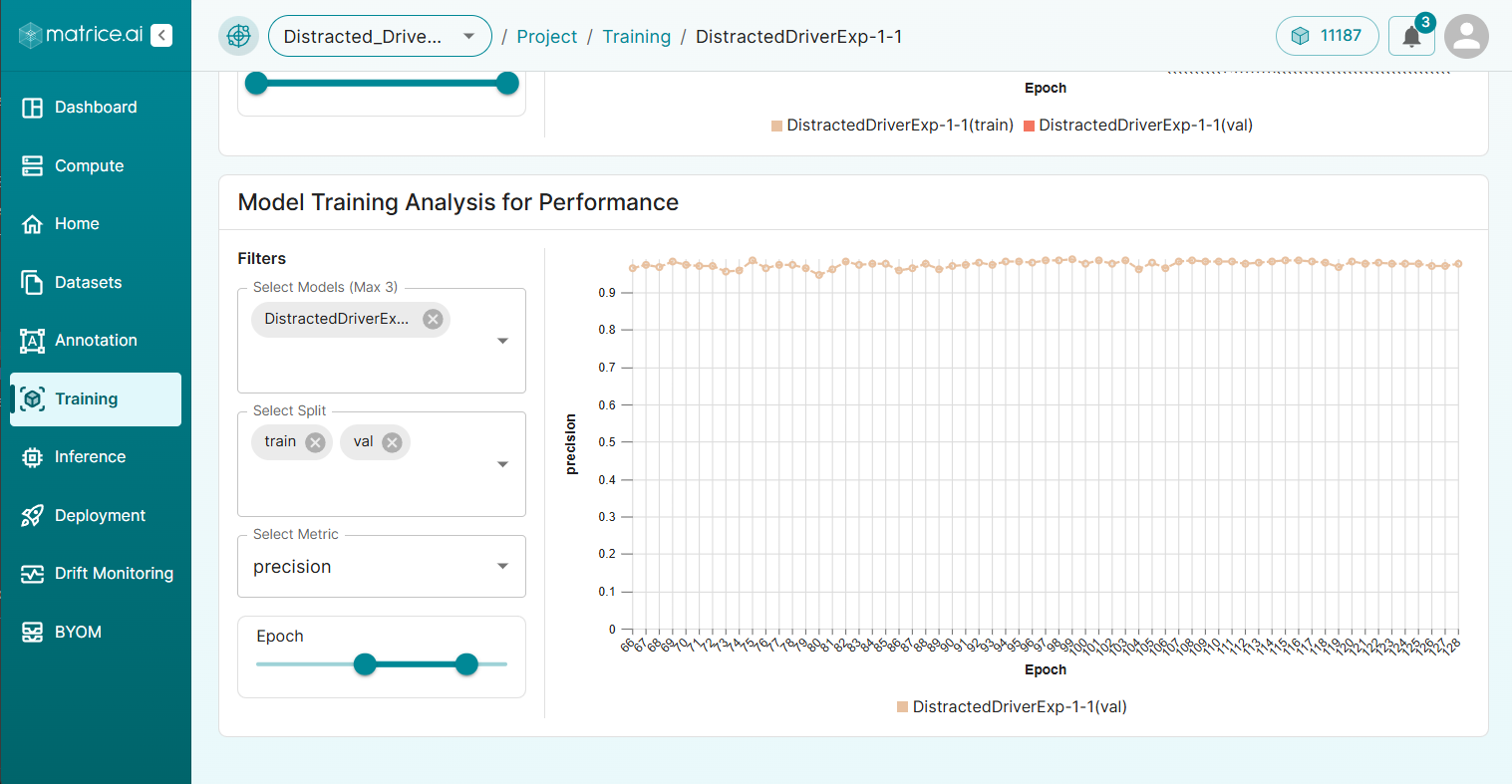

Using the YOLOv8 model, we conducted a series of experiments to find the optimal configuration for recognizing distracted driving behaviors. After testing various configurations, the following settings were found to give the best balance between precision and fitness:

Batch Size: 16

Epochs: 152

Learning Rate: 0.001

Momentum: 0.95

Weight Decay: 0.0005
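On Matrice these values are set through the interface, so no code is required. For readers who prefer scripts, the sketch below shows how the same settings might be expressed with the open-source Ultralytics package. Several things here are assumptions: that "Learning Rate" corresponds to the initial learning rate (`lr0`), the `yolov8n.pt` starting weights, and the dataset YAML path passed to `train`. The `train` function is defined but not called, and it needs `ultralytics` installed to run.

```python
# Hyperparameters as reported in this article.
REPORTED = {
    "Batch Size": 16,
    "Epochs": 152,
    "Learning Rate": 0.001,
    "Momentum": 0.95,
    "Weight Decay": 0.0005,
}

# Assumed mapping from the names above to Ultralytics train() arguments.
ARG_NAMES = {
    "Batch Size": "batch",
    "Epochs": "epochs",
    "Learning Rate": "lr0",  # assumption: the reported value is the initial LR
    "Momentum": "momentum",
    "Weight Decay": "weight_decay",
}


def to_train_kwargs(reported):
    """Translate the reported settings into keyword arguments."""
    return {ARG_NAMES[name]: value for name, value in reported.items()}


def train(data_yaml, weights="yolov8n.pt"):
    """Hypothetical training entry point; requires the ultralytics package."""
    from ultralytics import YOLO  # imported lazily so the sketch loads without it

    model = YOLO(weights)  # model size is an assumption, not stated in the article
    return model.train(data=data_yaml, **to_train_kwargs(REPORTED))


print(to_train_kwargs(REPORTED))
```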

4. Training Analysis for Model Performance

These hyperparameters helped our model achieve high precision and recall across different classes, ensuring accurate detection of both distracted and non-distracted driving actions.

Performance Metrics

The performance of our distracted driver detection model was evaluated on both the validation and test sets, providing the following metrics:

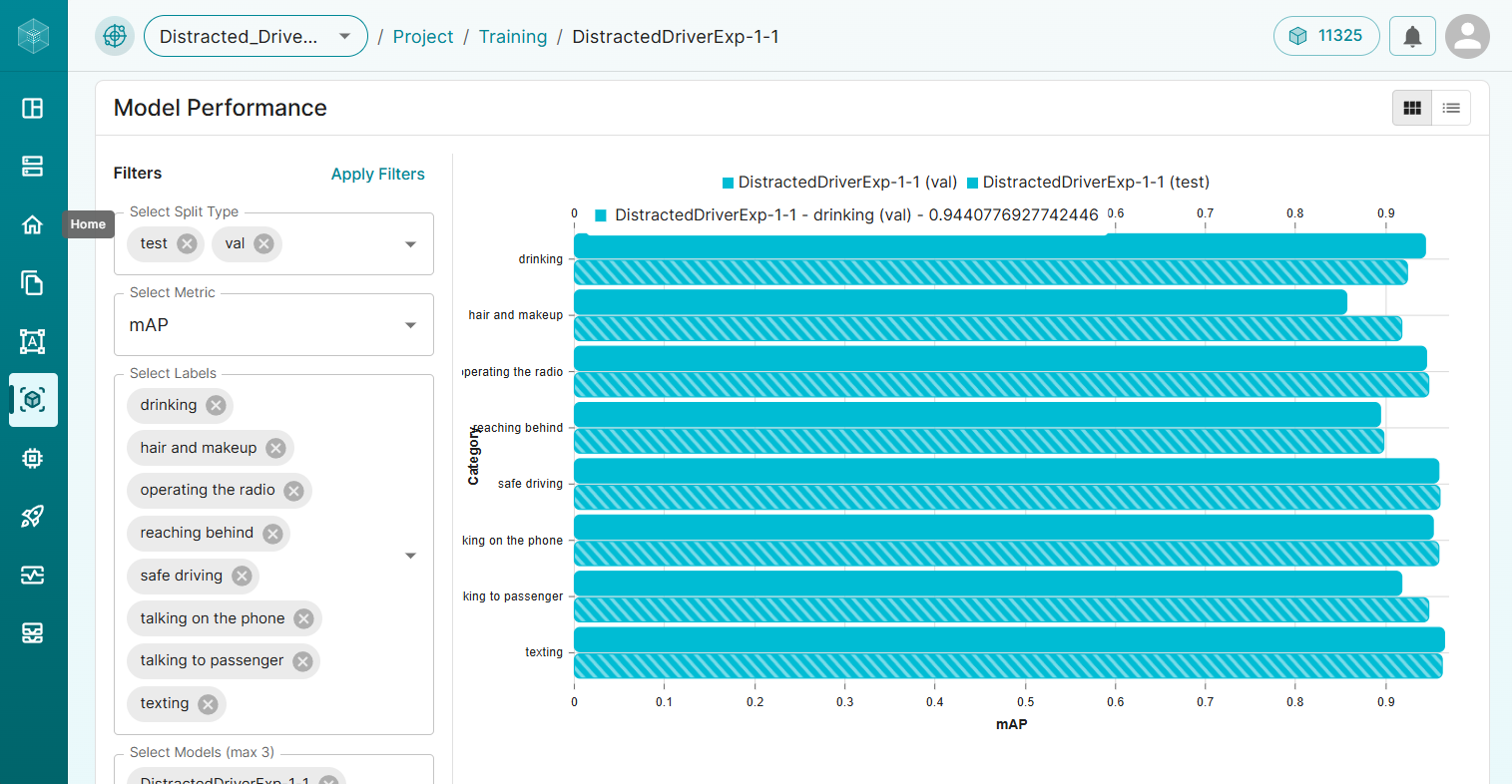

5. mAP for All the Classes

| Category | Precision (Validation) | Precision (Test) | Recall (Validation) | Recall (Test) |
|---|---|---|---|---|
| Drinking | 0.97 | 0.95 | 1.00 | 1.00 |
| Hair and Makeup | 0.94 | 0.99 | 0.90 | 0.964 |
| Operating the Radio | 0.99 | 0.98 | 1.00 | 1.00 |
| Reaching Behind | 0.97 | 0.99 | 1.00 | 1.00 |
| Safe Driving | 1.00 | 0.95 | 0.97 | 1.00 |
| Talking on the Phone | 0.98 | 0.99 | 0.97 | 1.00 |
| Talking to Passenger | 0.95 | 1.00 | 1.00 | 0.95 |
| Texting | 1.00 | 0.99 | 0.97 | 1.00 |

As shown, our model achieved high precision and recall across most categories, especially for critical distractions like texting and talking on the phone, which are significant contributors to road accidents.
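Precision and recall can also be combined into a single F1 score, F1 = 2PR / (P + R), which makes it easier to compare classes at a glance. The sketch below applies that standard formula to the test-set figures reported above and also computes simple (macro) averages across the eight classes. F1 is not a metric reported by the platform in this article; it is derived here purely as an illustration.

```python
# Test-set (precision, recall) pairs copied from the table above.
TEST_METRICS = {
    "Drinking": (0.95, 1.00),
    "Hair and Makeup": (0.99, 0.964),
    "Operating the Radio": (0.98, 1.00),
    "Reaching Behind": (0.99, 1.00),
    "Safe Driving": (0.95, 1.00),
    "Talking on the Phone": (0.99, 1.00),
    "Talking to Passenger": (1.00, 0.95),
    "Texting": (0.99, 1.00),
}


def f1_score(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


f1_by_class = {name: f1_score(p, r) for name, (p, r) in TEST_METRICS.items()}
macro_precision = sum(p for p, _ in TEST_METRICS.values()) / len(TEST_METRICS)
macro_recall = sum(r for _, r in TEST_METRICS.values()) / len(TEST_METRICS)

for name, score in f1_by_class.items():
    print(f"{name:<22} F1 = {score:.3f}")
print(f"Macro precision = {macro_precision:.3f}, macro recall = {macro_recall:.3f}")
```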

Model Inference

One of the standout features of our platform is the ability to export trained models to different formats, such as ONNX, TensorRT, and OpenVINO. This flexibility ensures the model can be deployed across various hardware configurations, from high-performance GPUs to lower-powered edge devices. For instance, a distracted driver detection model can be deployed on a low-powered device within a vehicle, making it accessible for on-road applications without requiring substantial computing resources. Alternatively, companies with extensive computing resources can deploy the model in formats optimized for high-performance environments.

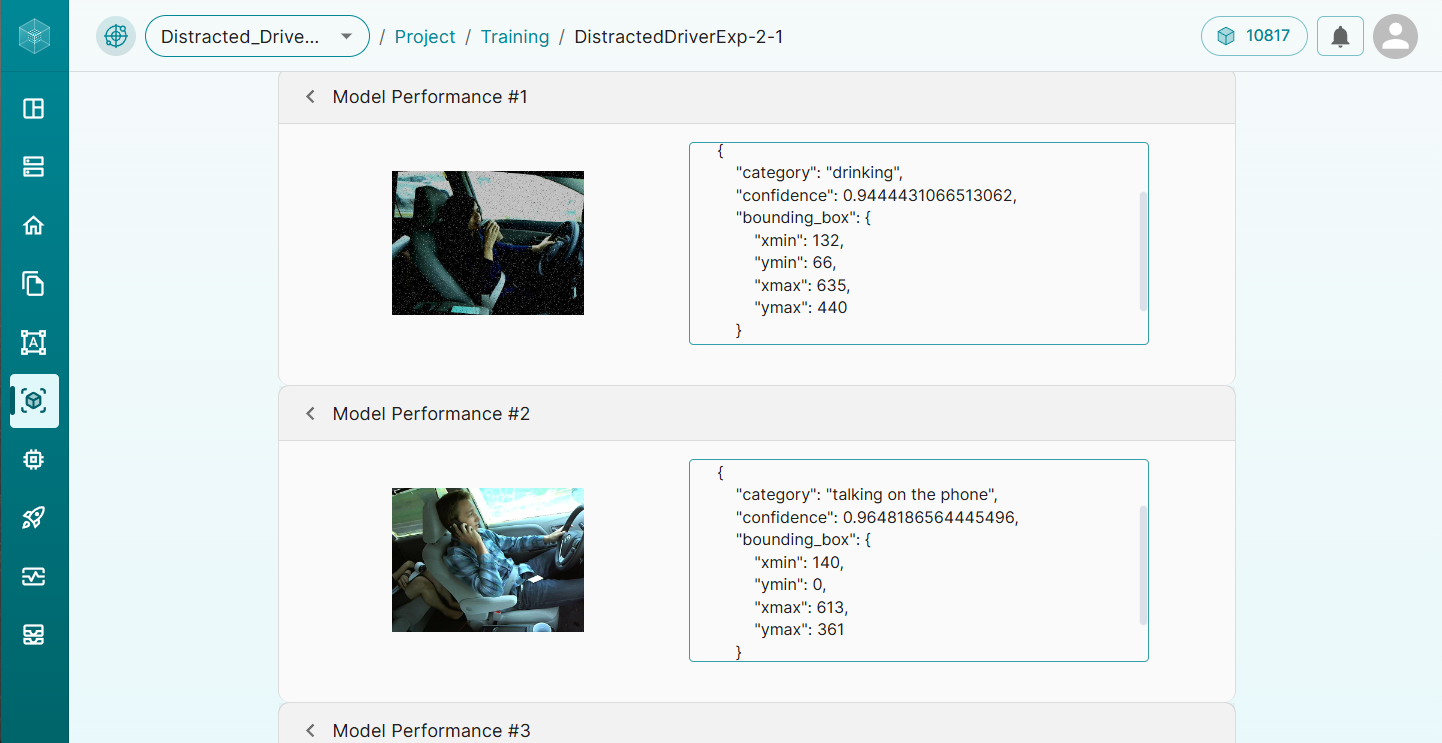

6. Example Preview of Model Test

Our platform provides an intuitive testing interface. Users can drag and drop images to see real-time model predictions, gaining quick insights into how the model performs across different distraction scenarios.

Model Deployment

With Matrice, deploying a model is seamless. Once the model is trained and evaluated, it can be deployed on our specialized servers, handling all computation on the backend. Users simply upload images, and predictions are returned almost instantly.

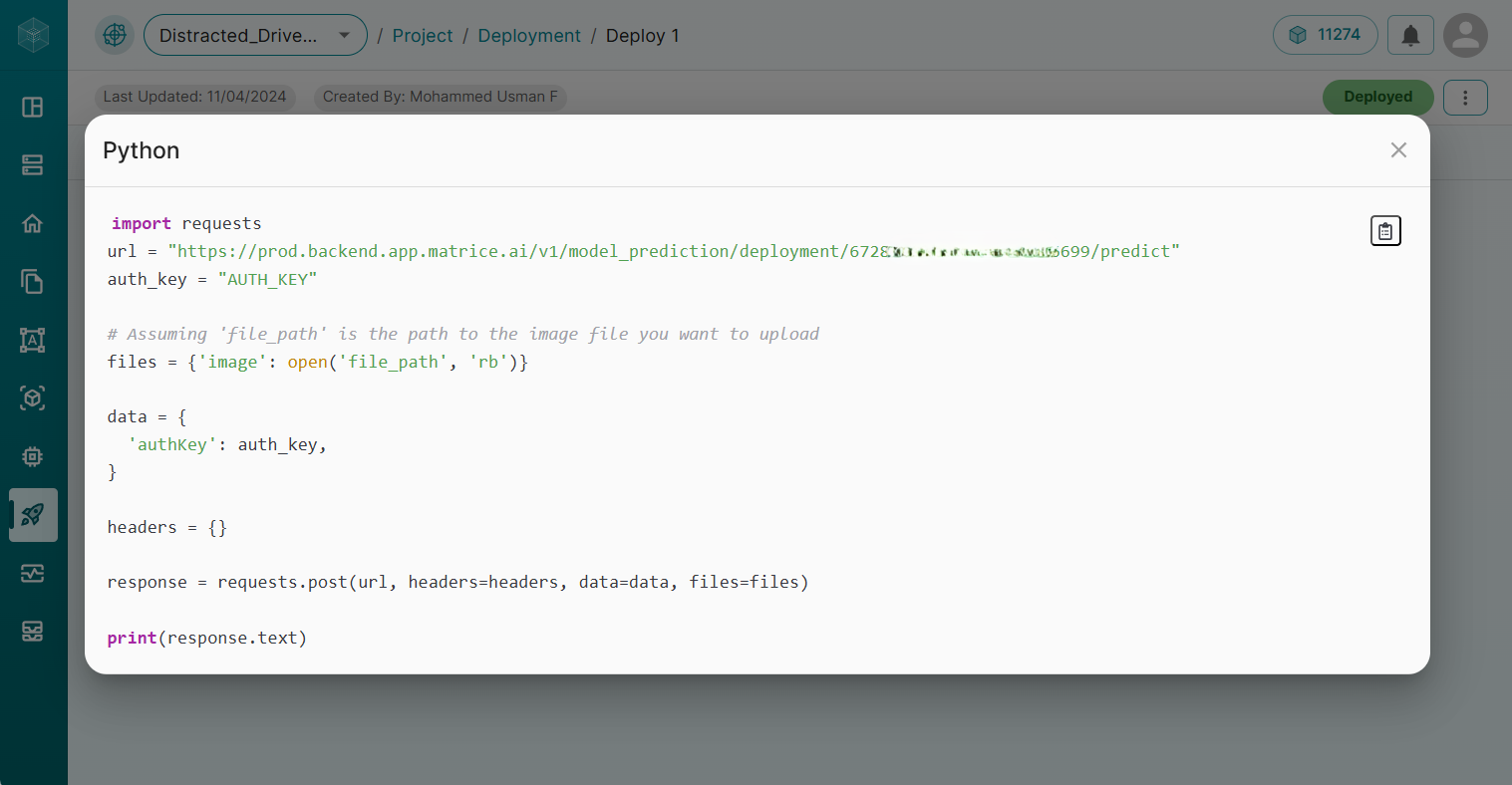

7. Example Code Preview for Python

The platform also provides API integration code for several programming languages, including Python, JavaScript, PHP, and Golang, ensuring flexibility for various applications. Whether integrating into a web service, mobile app, or custom software solution, Matrice makes deployment quick and efficient.

Real-World Applications

Our distracted driver detection model can be applied in various real-world scenarios to enhance road safety:

Real-Time Alerts: The model can be integrated into in-car systems to provide real-time alerts, notifying drivers when they are engaged in unsafe behaviors. This allows for prompt corrective actions to prevent potential accidents.

Fleet Monitoring: Companies can use this model in commercial fleet operations to monitor drivers’ behavior, ensuring adherence to safety protocols. Any instances of distraction can be logged and used for coaching sessions to improve driver safety.

Insurance Compliance: Insurance companies could leverage this technology to encourage safe driving practices by offering discounts to customers who maintain focus on the road, as detected by the model.
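For the real-time alert scenario, one practical concern is that a single misclassified frame should not trigger a warning. A common way to handle this is to look at a sliding window of recent predictions and alert only when enough of them show a distraction. The sketch below illustrates that idea. The class name, the 15-frame window, and the threshold of 9 distracted frames are illustrative assumptions, not settings from our deployment.

```python
from collections import deque


class DistractionAlert:
    """Raise an alert only when distraction persists across recent frames."""

    def __init__(self, window=15, min_distracted=9, safe_label="Safe driving"):
        self.history = deque(maxlen=window)  # oldest frames drop off automatically
        self.min_distracted = min_distracted
        self.safe_label = safe_label

    def update(self, predicted_label):
        """Record one per-frame prediction and return True if an alert is due."""
        self.history.append(predicted_label != self.safe_label)
        if len(self.history) < self.history.maxlen:
            return False  # not enough frames yet to judge
        return sum(self.history) >= self.min_distracted


# Simulated stream: 10 safe frames followed by 15 frames of texting.
frames = ["Safe driving"] * 10 + ["Texting"] * 15
alert = DistractionAlert()
alerts = [alert.update(label) for label in frames]
first_alert = alerts.index(True) if True in alerts else None
print("First alert at frame index:", first_alert)
```

A larger window or a higher threshold makes the alert less jumpy but slower to react, so both values would need tuning against the camera frame rate and how quickly a warning should reach the driver.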

Conclusion

The rise of computer vision has opened new avenues for proactive road safety measures. By implementing a distracted driver detection model with Matrice’s no-code platform, companies and organizations can monitor and mitigate risky driving behaviors, ultimately reducing the likelihood of accidents.

From dataset preparation to model deployment, Matrice offers a streamlined solution for developing and implementing distracted driving detection systems. Start your journey today to create safer roads and promote attentive driving.
