Healing with Vision: AI-Powered Wound Segmentation for Advanced Healthcare

Introduction

Chronic wounds represent a significant global health challenge, affecting millions of individuals and imposing a substantial burden on healthcare systems. Conditions such as pressure ulcers, diabetic foot ulcers, and venous leg ulcers can persist for months or even years, leading to severe pain, reduced mobility, increased risk of infection, and, in severe cases, limb amputation. The conventional assessment of wound healing often relies on subjective visual inspection and manual measurements, which can be inconsistent, time-consuming, and prone to human error. This subjectivity can lead to suboptimal treatment plans, delayed healing, and prolonged patient suffering.

Artificial Intelligence, particularly through advanced computer vision and precise image segmentation techniques, is poised to revolutionize wound care management. By enabling automated, objective, and accurate quantification of wound area and tissue composition from digital images, AI-powered wound segmentation promises to transform clinical assessment, personalize treatment strategies, and accelerate the healing process. This blog post explores our cutting-edge AI model designed for robust wound segmentation, highlighting its technical capabilities, profound clinical benefits, diverse applications, and the significant impact it promises for improving patient outcomes in wound care.

1. The Critical Need for Automated Wound Segmentation

The urgency for efficient and accurate wound segmentation stems from several critical factors, directly impacting patient well-being, clinical efficiency, and healthcare economics:

Objective Wound Assessment: Manual measurement of wound area and classification of tissue types (e.g., granulation, slough, necrotic) are often inconsistent between clinicians. Automated segmentation provides a precise, objective, and reproducible method for quantifying wound size and composition, crucial for tracking healing progression.

Personalized Treatment Planning: Accurate, quantitative data on wound characteristics allows clinicians to tailor treatment plans more effectively, selecting the most appropriate dressings, debridement methods, and therapies based on the wound’s specific state.

Early Detection of Complications: Changes in wound size or the appearance of non-viable tissue can indicate infection or delayed healing. AI can identify these critical changes objectively and proactively, allowing for timely intervention and preventing severe complications.

Reducing Healthcare Costs and Resource Strain: Prolonged wound healing leads to increased healthcare expenditure due to extended hospital stays, frequent clinic visits, and costly dressings. AI-driven efficiency can accelerate healing, reduce complications, and optimize resource allocation.

Enabling Telemedicine for Wound Care: For patients in remote areas or those with limited mobility, frequent in-person wound assessments can be challenging. AI-powered segmentation enables high-quality remote monitoring and consultation via digital images, democratizing access to specialized wound care.

By addressing these multifaceted challenges, AI-powered wound segmentation is not merely a technological enhancement; it’s a fundamental step towards more precise, personalized, and efficient wound care, ultimately leading to faster healing and improved quality of life for patients.

2. Benefits of AI in Wound Segmentation

AI-powered wound segmentation systems offer a multitude of transformative benefits that are reshaping clinical practice in wound care:

Precision and Consistency in Measurement: AI algorithms delineate wound boundaries at the pixel level, providing consistent and reproducible measurements of wound area and perimeter over time (and of volume where 3D imaging is available). This removes much of the human variability in assessment.

Objective Tissue Classification: Beyond just the wound boundary, AI can categorize different tissue types (e.g., healthy granulation, slough, eschar, infection signs) within the wound bed, offering a detailed, objective breakdown crucial for guiding debridement and treatment decisions.

Accelerated Healing Monitoring: Automated analysis enables frequent and rapid assessment of healing progression, allowing clinicians to quickly identify if a treatment plan is effective or if adjustments are needed, thus potentially accelerating the healing process.

Reduced Infection Risk through Early Detection: Subtle changes in wound appearance, often indicative of early infection, can be difficult to spot manually. AI can be trained to recognize these nuanced patterns, prompting timely intervention and reducing the risk of severe infections.

Facilitating Telemedicine and Remote Monitoring: Patients or caregivers can capture digital images of wounds using standard cameras, which can then be analyzed by AI for remote assessment. This is invaluable for patients who struggle with mobility or live far from specialized clinics.

Optimizing Clinical Workflow and Resource Allocation: Automating wound assessment frees up valuable nursing and clinical staff time, allowing them to focus more on direct patient care, education, and complex cases. This leads to more efficient clinic operations.

3. Data Preparation for Robust AI

The success of our wound segmentation model is directly attributable to the meticulous preparation of a diverse and high-quality dataset. This process involved collecting and annotating vast quantities of wound images, encompassing a wide range of types, severities, and clinical presentations. Key aspects of our data preparation strategy included:

Diverse Wound Types and Etiologies: The dataset included images of various wound types (e.g., pressure injuries, diabetic foot ulcers, venous ulcers, surgical wounds, burns), ensuring the model’s adaptability across different clinical contexts.

Comprehensive Tissue Composition: Images featured wounds with varying proportions of different tissue types (granulation, epithelialization, slough, necrotic tissue, hypergranulation) to train the model for detailed tissue classification.

Varying Lighting, Angles, and Patient Skin Tones: Images were captured under diverse lighting conditions, camera angles, and across a spectrum of patient skin tones to ensure the model’s robustness and generalization to real-world clinical photography.

Presence of Periwound Skin and Dressings: The dataset included images with surrounding healthy skin, periwound characteristics (e.g., erythema, maceration), and sometimes partial presence of dressings or accessories, training the model to distinguish wound boundaries accurately.

Pixel-Level Annotation: Highly experienced wound care specialists meticulously annotated the boundaries of the wounds and delineated different tissue types at the pixel level. This precise and detailed labeling served as the indispensable ground truth for supervised learning.

Rigorous Quality Control and Augmentation: Annotations underwent strict quality checks, and data augmentation techniques (e.g., rotation, scaling, brightness adjustments, blurring) were applied to artificially expand the dataset’s diversity and enhance the model’s robustness.
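
The augmentation step can be illustrated with a minimal sketch. This is not our production pipeline: it uses plain Python lists for a grayscale image, covers only rotation (in quarter turns) and brightness, and the function names and the 0.7 to 1.3 brightness range are assumptions chosen for illustration. The point it shows is that geometric transforms must be applied to the image and its annotation mask together, while photometric transforms apply to the image only.

```python
import random

def rotate90(grid):
    """Rotate a 2D grid (list of rows) 90 degrees clockwise."""
    return [list(row) for row in zip(*grid[::-1])]

def adjust_brightness(image, factor):
    """Scale grayscale intensities in [0, 1] and clip to that range."""
    return [[min(1.0, max(0.0, px * factor)) for px in row] for row in image]

def augment(image, mask, rng):
    """Return one randomly augmented (image, mask) pair.

    Geometric transforms are applied to both image and mask so the
    labels stay aligned; photometric transforms touch only the image.
    """
    for _ in range(rng.randrange(4)):   # 0 to 3 quarter turns
        image, mask = rotate90(image), rotate90(mask)
    factor = rng.uniform(0.7, 1.3)      # assumed brightness range
    return adjust_brightness(image, factor), mask
```

Calling `augment(image, mask, random.Random(0))` repeatedly yields different variants of the same annotated example; the number of labeled wound pixels in the mask is unchanged by every transform shown here.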

Model Architecture

The foundation of our wound segmentation system is the YOLOv9m architecture. YOLO (You Only Look Once) is best known for fast object detection, but the family has been extended to instance segmentation, which delineates each detected object at the pixel level. That makes it a good fit for outlining wound areas and their internal tissue components. The YOLOv9m variant offers a practical balance between segmentation accuracy and inference speed, which matters for point-of-care assessment.

Key advantages of YOLOv9m in the context of wound segmentation include:

Real-Time Segmentation: YOLOv9m’s optimized design allows fast analysis of wound images (about 0.4 s per image on average), so wound maps and tissue composition analysis are available almost immediately for point-of-care decisions.

Precise Boundary Delineation: The model excels at accurately segmenting the irregular and often complex boundaries of wounds, distinguishing them from surrounding healthy or compromised skin at a pixel level.

Robustness to Visual Variability: Its sophisticated deep learning layers are highly effective at extracting intricate features from wound images affected by varying light conditions, presence of exudate, or different skin tones, ensuring reliable performance in diverse clinical settings.

Efficient Mask Generation: Beyond just identifying a wound, YOLOv9m generates detailed pixel-level masks for each identified wound area and potentially sub-regions of tissue types, providing the precise output necessary for quantitative analysis.
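
To show how a pixel-level mask turns into a quantitative measurement, here is a minimal sketch that converts a binary wound mask into an area in square centimetres. The function name and the `mm_per_pixel` parameter are hypothetical; the sketch assumes the physical pixel size is known, for example from a ruler or calibration marker in the photograph, and that the camera is roughly perpendicular to the wound.

```python
def wound_area_cm2(mask, mm_per_pixel):
    """Area of a binary mask (rows of 0/1 values) in square centimetres.

    mm_per_pixel is the physical side length of one pixel; it is an
    assumed input that would come from image calibration.
    """
    wound_pixels = sum(sum(row) for row in mask)
    area_mm2 = wound_pixels * mm_per_pixel ** 2
    return area_mm2 / 100.0  # 100 mm^2 per cm^2
```

For example, a mask with 4 wound pixels at 5 mm per pixel covers 4 × 25 = 100 mm², which is 1 cm².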

Training Parameters

The model underwent extensive training to optimize its performance across the diverse dataset. The key training parameters were carefully selected to ensure stability, rapid convergence, and robust generalization to new, unseen wound images:

| Parameter | Value | Description |
|---|---|---|
| Base Model | YOLOv9m | The foundational deep learning architecture employed for the task, known for its efficiency and accuracy in instance segmentation. |
| Batch Size | 8 | Number of samples processed before the model’s internal parameters are updated, balancing training stability and computational efficiency. |
| Learning Rate | 0.0005 | Controls the step size during the optimization process, a conservative rate chosen for stable convergence and fine-tuning. |
| Epochs | 70 | Number of complete passes through the entire training dataset, ensuring the model learns extensively from the data and generalizes well. |
| Optimizer | AdamW | An adaptive learning rate optimization algorithm (Adam with decoupled weight decay) known for its efficiency and strong performance in deep learning tasks. |
| Inference Time | ~0.4s | The average time taken for the trained model to process a single wound image and generate a segmentation mask. |
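
To make "Adam with decoupled weight decay" concrete, the sketch below performs one AdamW update for a single scalar weight using the learning rate from the table. It is an illustration of the update rule, not our training code: the `beta1`, `beta2`, `eps`, and `weight_decay` values are common defaults assumed here, since the post does not state them.

```python
import math

def adamw_step(w, grad, m, v, t, lr=0.0005, beta1=0.9, beta2=0.999,
               eps=1e-8, weight_decay=0.01):
    """One AdamW update for a single scalar weight.

    Returns the updated (w, m, v). t is the 1-based step count.
    All defaults except lr are assumed values, not from the post.
    """
    # Decoupled weight decay: shrink the weight directly, not via the gradient.
    w = w * (1.0 - lr * weight_decay)
    # Exponential moving averages of the gradient and its square.
    m = beta1 * m + (1.0 - beta1) * grad
    v = beta2 * v + (1.0 - beta2) * grad * grad
    # Bias correction for the zero-initialised averages.
    m_hat = m / (1.0 - beta1 ** t)
    v_hat = v / (1.0 - beta2 ** t)
    w = w - lr * m_hat / (math.sqrt(v_hat) + eps)
    return w, m, v
```

On the first step the bias-corrected ratio has magnitude close to 1, so the weight moves by roughly the learning rate regardless of the gradient's scale. This is one reason a small rate such as 0.0005 gives stable early training.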

Model Evaluation

Our training and validation processes have yielded a model with solid baseline capabilities for wound segmentation. The evaluation metrics below summarize the model’s precision, recall, and overall segmentation accuracy, broken down by class.

| Metric | Overall Performance | Wound Area | Healthy Tissue/Background |
|---|---|---|---|
| Precision | 0.74 | 0.76 | 0.72 |
| Recall | 0.75 | 0.78 | 0.73 |
| F1 Score | 0.75 | 0.77 | 0.72 |
| mAP | 0.70 | 0.72 | 0.68 |
| Inference Time | ~0.4s | - | - |

Precision (0.74 Overall): When our model labels pixels as belonging to a wound, it is correct 74% of the time, so roughly one in four predicted wound pixels is a false positive.

Recall (0.75 Overall): With a recall of 75%, the model identifies most of the actual wound pixels in an image, which means about a quarter of wound pixels are still missed. Recall matters for comprehensive assessment because missed regions lead to an underestimated wound area.

F1 Score (0.75 Overall): The F1 Score, a harmonic mean of precision and recall, provides a balanced measure of the model’s overall accuracy in segmentation, reflecting its robust performance in identifying and correctly outlining wound areas.

Mean Average Precision (mAP) (0.70 Overall): As a comprehensive metric for instance segmentation tasks, mAP of 0.70 signifies strong overall performance across both “Wound Area” and “Healthy Tissue/Background” classes, indicating reliable accuracy in generating precise masks for wound assessment.

Inference Time (~0.4s): The sub-second inference time ensures that wound segmentation can be generated almost instantaneously, making the system highly practical for real-time clinical assessment and telemedicine applications.

The per-category metrics provide further insight: “Wound Area” scores higher than “Healthy Tissue/Background” on every metric, and in both classes recall is slightly higher than precision (0.78 vs. 0.76 for “Wound Area”, 0.73 vs. 0.72 for “Healthy Tissue/Background”). The model therefore leans toward capturing the full wound at the cost of some over-segmentation.
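
The pixel-level definitions of precision, recall, and F1 described above can be sketched as follows. This is an illustrative helper, not our evaluation code: the function names are hypothetical, the masks are plain lists of 0/1 rows, and mAP is not computed here because it also depends on confidence scores and an overlap threshold.

```python
def segmentation_metrics(pred, truth):
    """Pixel-level precision, recall, and F1 for two binary masks."""
    tp = fp = fn = 0
    for pred_row, truth_row in zip(pred, truth):
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1          # wound pixel correctly predicted
            elif p and not t:
                fp += 1          # predicted wound, actually background
            elif t and not p:
                fn += 1          # wound pixel the model missed
    precision = tp / (tp + fp) if tp + fp else 0.0
    recall = tp / (tp + fn) if tp + fn else 0.0
    return precision, recall, f1_from(precision, recall)

def f1_from(precision, recall):
    """Harmonic mean of precision and recall (0.0 if both are zero)."""
    total = precision + recall
    return 2 * precision * recall / total if total else 0.0
```

As a consistency check against the table, `f1_from(0.76, 0.78)` rounds to 0.77, matching the reported F1 for “Wound Area”.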

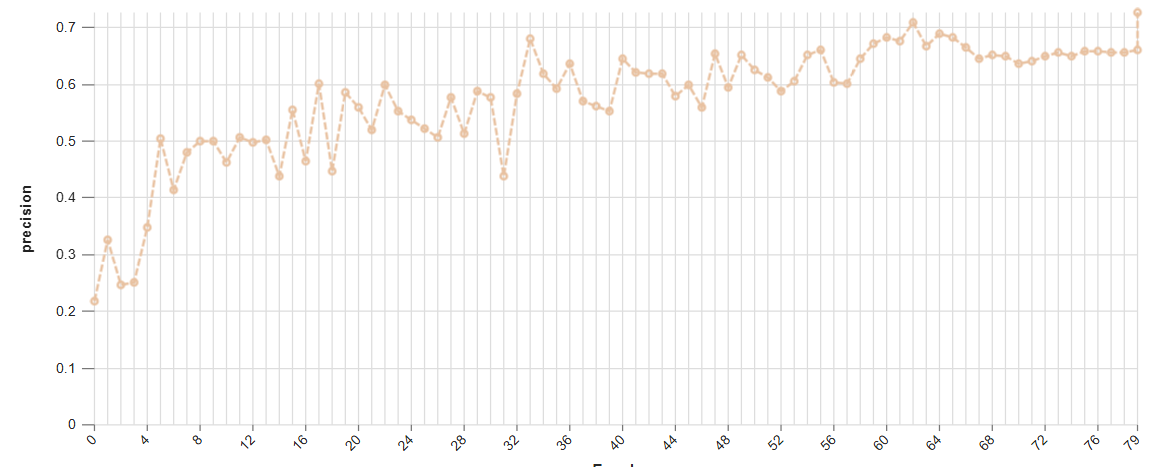

Epoch vs. Precision during Training

To demonstrate the training dynamics and performance stability of our model, the following graph illustrates the progression of precision over epochs. This visualization highlights how the model refined its accuracy during the training process, converging towards its optimal performance.

This graph shows the increase in model precision as training progresses over multiple epochs, demonstrating the learning curve and stability of the YOLOv9m architecture in learning wound characteristics.

Model Inference Examples

Below are conceptual examples demonstrating the model’s output when analyzing wound images for precise segmentation. The AI accurately identifies and outlines the wound area, often highlighting different tissue types within the wound bed.

Example 1: Precise Segmentation of a Chronic Wound

This image showcases our AI model in action, accurately segmenting the boundaries of a wound, distinguishing it from surrounding healthy tissue. This precise mask provides quantitative data for monitoring healing progression.

4. Real-World Applications and Societal Impact

The deployment of this AI-powered wound segmentation system is poised to create a profound impact across various healthcare settings and patient care pathways:

Telemedicine and Remote Wound Care: Enables patients to capture images of their wounds at home for remote assessment by clinicians, improving access to specialized care, particularly for those with limited mobility or in rural areas.

Automated Wound Measurement and Tracking: Provides objective, consistent, and automatic calculation of wound area over time (volumetric measurement is a planned extension), reducing manual errors and facilitating precise monitoring of healing progression or deterioration.

Clinical Decision Support: Offers clinicians quantitative data and visual maps of wound characteristics (e.g., percentage of granulation, slough, necrotic tissue) to aid in selecting the most appropriate dressings, debridement methods, and treatment protocols.

Early Detection of Infection and Complications: AI can be trained to recognize subtle changes in wound appearance that might indicate impending infection or other complications, prompting early intervention and preventing severe outcomes.

Research and Clinical Trials: Provides a standardized and automated method for collecting high-quality wound data for clinical trials, accelerating research into new therapies and improving statistical power.

Optimizing Supply Chain for Dressings: Accurate wound sizing data can help optimize the ordering and use of specialized wound care products, reducing waste and improving cost-effectiveness in healthcare facilities.
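
Two of the quantities mentioned above, tissue composition percentages for decision support and area change for healing tracking, can be derived from segmentation output with very little code. The sketch below is a hedged illustration: the label scheme in `TISSUE_LABELS` and both function names are hypothetical, and it assumes a per-pixel tissue map in which 0 marks background.

```python
from collections import Counter

# Hypothetical label scheme for a per-pixel tissue map (0 = background).
TISSUE_LABELS = {1: "granulation", 2: "slough", 3: "necrotic"}

def tissue_composition(label_map):
    """Percentage of wound pixels belonging to each tissue type."""
    counts = Counter(px for row in label_map for px in row if px != 0)
    total = sum(counts.values())
    if total == 0:
        return {}
    return {TISSUE_LABELS[label]: 100.0 * n / total
            for label, n in counts.items()}

def percent_area_reduction(initial_area, current_area):
    """Positive values mean the wound shrank since the first visit."""
    return 100.0 * (initial_area - current_area) / initial_area
```

For instance, a wound measured at 10.0 cm² on the first visit and 7.5 cm² at follow-up gives `percent_area_reduction(10.0, 7.5) == 25.0`, a single number that is easy to chart across visits.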

5. Future Directions in AI-Powered Wound Segmentation

Our commitment to innovation ensures continuous development and enhancement of our AI capabilities in wound care. Future efforts will focus on:

3D Volumetric Wound Analysis: Integrating segmentation with 3D imaging techniques (e.g., from structured light scanners or multi-view images) to provide accurate volumetric measurements of wounds, crucial for complex cases.

Multi-Spectral Imaging for Tissue Characterization: Utilizing multi-spectral or hyperspectral cameras to capture more detailed information about tissue oxygenation, blood flow, and bacterial presence, enabling more nuanced wound assessment.

Predictive Modeling of Healing Outcomes: Developing AI models that can predict the likelihood of wound healing within a certain timeframe or identify patients at risk of non-healing, based on segmented data and clinical factors.

Personalized Dressing and Treatment Recommendations: AI systems that can recommend specific dressings or interventions based on the precise tissue composition and characteristics identified through segmentation.

Integration with Wearable Sensors: Combining image-based segmentation with data from wearable sensors (e.g., for temperature, pH) to provide a holistic view of the wound environment and healing progress.

Conclusion

AI-powered wound segmentation represents a transformative leap forward in modern healthcare. By delivering unparalleled objectivity, precision, and efficiency in wound assessment, our solution empowers clinicians to provide more personalized, effective, and timely care, ultimately accelerating healing and significantly improving the quality of life for patients suffering from chronic wounds. As we continue to refine and expand these capabilities, the future promises an even more intelligent, data-driven, and compassionate approach to wound care management.